Mapping public perception of artificial intelligence: Expectations, risk–benefit tradeoffs, and value as determinants for societal acceptance

IF 13.3

CAS Zone 1, Management

Q1 BUSINESS

Technological Forecasting and Social Change

Pub Date : 2025-08-16

DOI:10.1016/j.techfore.2025.124304

Citations: 0

Abstract

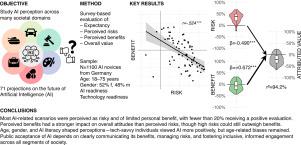

Public opinion on artificial intelligence (AI) plays a pivotal role in shaping trust and AI alignment, ethical adoption, and the development of equitable policy frameworks. This study investigates expectations, risk–benefit tradeoffs, and value assessments as determinants of societal acceptance of AI. Using a nationally representative sample (N = 1100) from Germany, we examined mental models of AI and potential biases. Participants evaluated 71 AI-related scenarios across domains such as autonomous driving, medical care, art, politics, warfare, and societal divides, assessing their expected likelihood, perceived risks, benefits, and overall value. We present ranked evaluations alongside visual mappings illustrating the risk–benefit tradeoffs. Our findings suggest that while many scenarios were considered likely, they were often associated with high risks, limited benefits, and low overall value. Regression analyses revealed that 96.5% (R² = 0.965) of the variance in value judgments was explained by risks (β = -0.490) and, more strongly, benefits (β = +0.672), with no significant relationship to expected likelihood. Demographics and personality traits, including age, gender, and AI readiness, influenced perceptions, highlighting the need for targeted AI literacy initiatives. These findings offer actionable insights for researchers, developers, and policymakers, underscoring the need to communicate tangible benefits and address public concerns to foster responsible and inclusive AI adoption. Future research should explore cross-cultural differences and longitudinal changes in public perception to inform global AI governance.
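The regression result reported in the abstract can be illustrated with a minimal sketch, assuming an ordinary least squares fit on z-scored variables (the abstract does not state the exact model specification). The data below are synthetic and generated only for illustration; they are not the study's data. The function name `standardized_ols`, the generating coefficients, the noise level, and the one-row-per-scenario layout are all assumptions.

```python
import numpy as np


def standardized_ols(X, y):
    """Fit OLS on z-scored predictors and outcome.

    Returns the standardized coefficients (betas) and R^2.
    No intercept is needed because z-scored variables have mean zero.
    """
    Xz = (X - X.mean(axis=0)) / X.std(axis=0)
    yz = (y - y.mean()) / y.std()
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    residuals = yz - Xz @ beta
    r2 = 1.0 - (residuals @ residuals) / (yz @ yz)
    return beta, r2


# Synthetic illustration only: value depends on risk (negatively) and
# benefit (positively), and not on expected likelihood.
rng = np.random.default_rng(0)
n = 71  # assumption: one row per scenario
risk = rng.normal(size=n)
benefit = rng.normal(size=n)
likelihood = rng.normal(size=n)
value = -0.5 * risk + 0.7 * benefit + rng.normal(scale=0.2, size=n)

X = np.column_stack([risk, benefit, likelihood])
beta, r2 = standardized_ols(X, value)

for name, b in zip(["risk", "benefit", "likelihood"], beta):
    print(f"beta[{name}] = {b:+.3f}")
print(f"R^2 = {r2:.3f}")
```

In a fit of this kind, a negative coefficient for risk, a larger positive coefficient for benefit, and a near-zero coefficient for likelihood would correspond to the pattern the abstract describes.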


Source journal

CiteScore: 21.30

Self-citation rate: 10.80%

Articles published: 813

Journal description:

Technological Forecasting and Social Change is a prominent platform for individuals engaged in the methodology and application of technological forecasting and future studies as planning tools, exploring the interconnectedness of social, environmental, and technological factors.

In addition to serving as a key forum for these discussions, we offer numerous benefits for authors, including complimentary PDFs, a generous copyright policy, exclusive discounts on Elsevier publications, and more.
