Diagnosis and Subtyping of Autoimmune Encephalitis Using an Attention-Based Multi-Instance Learning Model: A Multi-Center 18F-FDG PET Study

Abstract

Background

The aim was to develop an attention-based model using 18F-fluorodeoxyglucose (18F-FDG) PET imaging to differentiate autoimmune encephalitis (AE) patients from controls and to discriminate among different AE subtypes.

Methods

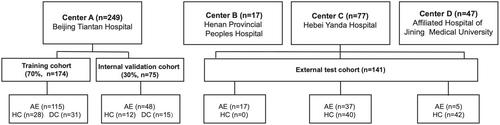

This multi-center retrospective study enrolled 390 participants: 222 patients with definite AE (four subtypes: LGI1-AE, NMDAR-AE, GABAB-AE, and GAD65-AE), 122 age- and sex-matched healthy controls, and two age- and sex-matched disease-control groups comprising 33 patients with antibody-negative AE and 13 patients with viral encephalitis. An attention-based multi-instance learning (MIL) model was trained on data from one hospital and externally validated on data from other institutions. In addition, a multi-modal MIL (m-MIL) model integrating imaging features with age and sex was evaluated and compared with logistic regression (LR) and random forest (RF) models.
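The abstract does not specify the network architecture. As a rough illustration only, the sketch below assumes the attention-pooling formulation commonly used in attention-based MIL (Ilse et al., 2018): each instance embedding h_k receives a weight a_k = softmax_k(w · tanh(V h_k)), and the bag embedding is the attention-weighted sum of the instances. What counts as an instance (for example, PET slices or regional patches), the weights `V` and `w`, the age/sex scaling in `multimodal_features`, and the logistic head `predict_prob` are all hypothetical choices for this sketch, not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_mil_pool(
    instances: Sequence[Sequence[float]], V: Matrix, w: Sequence[float]
) -> Tuple[Vector, Vector]:
    """Attention pooling: a_k = softmax_k(w . tanh(V h_k)), z = sum_k a_k h_k."""
    scores = []
    for h in instances:
        hidden = [math.tanh(x) for x in matvec(V, h)]
        scores.append(sum(wi * xi for wi, xi in zip(w, hidden)))
    weights = softmax(scores)
    dim = len(instances[0])
    bag = [sum(a * h[d] for a, h in zip(weights, instances)) for d in range(dim)]
    return bag, weights


def multimodal_features(bag: Sequence[float], age_years: float, is_male: bool) -> Vector:
    """Hypothetical fusion: append scaled age and a binary sex flag to the bag embedding."""
    return list(bag) + [age_years / 100.0, 1.0 if is_male else 0.0]


def predict_prob(features: Sequence[float], coef: Sequence[float], bias: float) -> float:
    """Hypothetical logistic head giving P(AE) from the fused feature vector."""
    z = bias + sum(c * f for c, f in zip(coef, features))
    return 1.0 / (1.0 + math.exp(-z))


# Toy bag of three 2-D instance embeddings; all numbers are illustrative only.
instances = [[0.2, 0.1], [0.9, 0.4], [0.1, 0.0]]
V = [[1.0, 0.5], [0.3, -0.2]]  # hypothetical learned projection
w = [1.5, -0.5]                # hypothetical attention vector
bag, weights = attention_mil_pool(instances, V, w)
features = multimodal_features(bag, age_years=45, is_male=False)
prob = predict_prob(features, coef=[1.0, 0.5, -0.3, 0.1], bias=-0.2)
print("attention weights:", [round(a, 3) for a in weights])
print("P(AE):", round(prob, 3))
```

In this sketch the per-instance attention weights are the kind of relevance scores an attention heatmap visualizes; in a trained model `V`, `w`, and the output head would be learned from data rather than set by hand.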

Results

The attention-based m-MIL model outperformed the classical algorithms (LR, RF) and the single-modal MIL model in binary classification of AE vs. all controls, achieving the highest accuracy (84.00% internal, 67.38% external) and sensitivity (90.91% internal, 71.19% external). For multiclass AE subtype classification, the MIL-based model achieved an accuracy of 95.05% (internal) and 77.97% (external). Heatmap analysis revealed that NMDAR-AE involved broader brain regions, including the medial temporal lobe (MTL) and basal ganglia (BG), whereas LGI1-AE and GABAB-AE showed focal attention on the MTL and BG. In contrast, GAD65-AE showed attention concentrated exclusively in the MTL.
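For reference, the reported metrics follow the conventional confusion-matrix definitions, with AE as the positive class: accuracy = (TP + TN) / N and sensitivity = TP / (TP + FN); multiclass subtype accuracy is the fraction of patients assigned their true subtype. The sketch below assumes these standard definitions (the abstract does not detail its computation), and the labels it uses are made-up toy values, not study data.

```python
from typing import Dict, Hashable, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Dict[str, float]:
    """Accuracy and sensitivity from paired binary labels (positive = AE)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
    }


def multiclass_accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of cases whose predicted subtype equals the true subtype."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy labels (1 = AE, 0 = control); not study data.
binary = binary_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 1, 0])
subtype_acc = multiclass_accuracy(
    ["LGI1", "NMDAR", "GABAB", "GAD65"], ["LGI1", "NMDAR", "LGI1", "GAD65"]
)
print(binary, subtype_acc)
```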

Conclusion

The m-MIL model effectively discriminates AE patients from controls and distinguishes among AE subtypes, offering a valuable diagnostic tool for the clinical assessment and classification of AE.
