Evaluating and advancing large language models for nanofiltration membrane knowledge tasks

IF 9.5

Citations: 0

Abstract

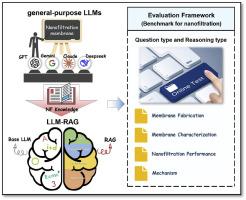

Nanofiltration (NF) is a rapidly growing field, and the resulting surge of publications covers diverse topics. Researchers find it challenging to quickly locate key information in this large body of literature. Large language models (LLMs) have shown promise in analyzing articles and reasoning about knowledge in some scientific fields, but their effectiveness in membrane research is unclear. Here, we introduced the first benchmark specifically designed for membrane studies and used it to systematically evaluate six general-purpose LLMs (i.e., Claude-3.5, Deepseek-R1, Gemini-2.0, GPT-4o-mini, Llama-3.2, and Mistral-small-3.1). Our findings revealed that the complexity and depth of NF knowledge pose a significant challenge for these LLMs, leading to poor performance, particularly in tasks involving membrane mechanisms. To improve the usefulness of LLMs in this field, we developed a specialized NF database and integrated it with the LLMs using Retrieval-Augmented Generation (RAG). RAG significantly improved performance across all models, with average gains of 18.5 % on Question type tasks and 10.8 % on Reasoning type tasks. Moreover, in areas such as membrane fabrication and characterization, several models with RAG outperformed human experts. These results suggest that RAG is a promising strategy for leveraging LLMs in NF research. This study introduces a new path for applying LLMs to membrane research and proposes a professional benchmark to ensure the reliable and effective use of LLMs.
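To illustrate the general idea behind RAG mentioned in the abstract, the following is a minimal sketch and not the authors' pipeline. It assumes a toy in-memory "database" of three illustrative passages, a simple bag-of-words cosine similarity as the retriever, and hypothetical helper names (`tokenize`, `retrieve`, `build_prompt`). A real system would likely use a curated NF literature database and learned embeddings, and would send the resulting prompt to an LLM.

```python
import math
import re
from collections import Counter

# Illustrative placeholder passages; NOT the specialized NF database from the study.
PASSAGES = [
    "Interfacial polymerization is widely used to fabricate polyamide nanofiltration membranes.",
    "Donnan exclusion contributes to ion rejection by charged nanofiltration membranes.",
    "Zeta potential measurements are used to characterize membrane surface charge.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, passages, k=2):
    """Return up to k passages most similar to the question (zero-score passages dropped)."""
    q_vec = Counter(tokenize(question))
    scored = [(cosine(q_vec, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(question, passages, k=2):
    """Assemble a prompt that places the retrieved passages before the question."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # The printed prompt is what would be passed to an LLM of choice.
    print(build_prompt("How is membrane surface charge characterized?", PASSAGES, k=1))
```

The retrieval step is deliberately simple so that the sketch stays self-contained; the point is only that the model answers with retrieved domain text in its context rather than from its parameters alone.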

