Efficient human–object-interaction (EHOI) detection via interaction label coding and Conditional Decision

IF 3.5

Zone 3, Computer Science

Q2 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

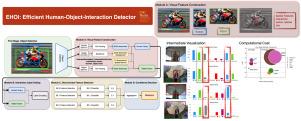

Human–Object Interaction (HOI) detection is a fundamental task in image understanding. While deep-learning-based HOI methods achieve high mean Average Precision (mAP), their training and inference are computationally expensive and opaque. This work proposes an Efficient HOI (EHOI) detector that strikes a good balance between detection performance, inference complexity, and mathematical transparency. EHOI is a two-stage method. In the first stage, a frozen object detector localizes the objects and extracts various features as intermediate outputs. In the second stage, an XGBoost classifier predicts the interaction type from these first-stage outputs. Our contributions include the application of error correction codes (ECCs) to encode rare interaction cases, which reduces the model size and the complexity of the second-stage XGBoost classifier. Additionally, we provide a mathematical formulation of the relabeling and decision-making process. Beyond the architecture, we present qualitative results that explain the functionality of the feedforward modules. Experimental results demonstrate the advantages of ECC-coded interaction labels and the favorable balance between detection performance and complexity achieved by the proposed EHOI method. The code is available at https://github.com/keevin60907/EHOI---Efficient-Human-Object-Interaction-Detector.
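The abstract does not say which code EHOI uses or how rare interactions are grouped, so the following is only a generic sketch of error-correcting output coding, assuming the textbook Hamming(7,4) code. Each interaction class is assigned a 7-bit codeword, one binary classifier per bit (for example, one XGBoost model per bit, an assumption here) would predict the bits, and decoding picks the class whose codeword is nearest in Hamming distance. Because the code's minimum distance is 3, any single wrong bit is corrected. The helper names `encode`, `build_codebook`, `hamming`, and `decode` are hypothetical.

```python
from itertools import product

# Systematic generator matrix of the Hamming(7,4) code (assumed, not from the paper):
# codeword = 4 data bits followed by 3 parity bits; minimum Hamming distance is 3.
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def encode(data_bits):
    """Multiply a 4-bit message by G over GF(2) to get a 7-bit codeword."""
    return tuple(sum(d * g for d, g in zip(data_bits, col)) % 2 for col in zip(*G))


def build_codebook(num_classes):
    """Map each class index to a distinct Hamming(7,4) codeword (at most 16 classes)."""
    if not 1 <= num_classes <= 16:
        raise ValueError("Hamming(7,4) has only 16 codewords")
    messages = list(product([0, 1], repeat=4))[:num_classes]
    return {cls: encode(msg) for cls, msg in enumerate(messages)}


def hamming(a, b):
    """Number of positions where two bit sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def decode(bits, codebook):
    """Return the class whose codeword is closest to the predicted bit vector."""
    return min(codebook, key=lambda cls: hamming(bits, codebook[cls]))


if __name__ == "__main__":
    codebook = build_codebook(10)   # e.g. 10 interaction classes -> 7 binary outputs
    noisy = list(codebook[6])
    noisy[2] ^= 1                   # simulate one per-bit classifier making a mistake
    print(decode(noisy, codebook))  # single-bit error is corrected -> 6
```

Here up to 16 classes fit into 7 binary outputs, versus one output per class in a one-vs-rest setup; this illustrates the kind of size reduction the abstract attributes to ECC-coded labels, though the paper's own code construction may differ.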



Source journal: Computer Vision and Image Understanding

Subject category: Engineering & Technology: Engineering, Electrical & Electronic

CiteScore: 7.80

Self-citation rate: 4.40%

Articles published: 112

Review time: 79 days

Journal description:

The central focus of this journal is the computer analysis of pictorial information. Computer Vision and Image Understanding publishes papers covering all aspects of image analysis from the low-level, iconic processes of early vision to the high-level, symbolic processes of recognition and interpretation. A wide range of topics in the image understanding area is covered, including papers offering insights that differ from predominant views.

Research Areas Include:

• Theory

• Early vision

• Data structures and representations

• Shape

• Range

• Motion

• Matching and recognition

• Architecture and languages

• Vision systems
