SIMNet: an infrared image action recognition network based on similarity evaluation

Abstract

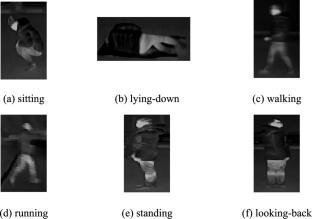

Infrared sensors are widely used in human action recognition because they are largely unaffected by lighting conditions and offer strong privacy protection. However, infrared images of different action classes are highly similar, and they lack discriminative cues such as texture and depth. As a result, traditional deep learning networks and their standard training and testing procedures tend to become trapped in local optima and achieve poor recognition accuracy. To address this issue, we propose a novel human action recognition method based on similarity evaluation, which departs from the traditional training and testing (verification) paradigm. First, we adopt a feature-to-feature training method that directs the network's attention to the behavioral information that distinguishes one class from another. Second, we design an Integrate Channel Attention Module (ICA) that enables the Siamese network to focus on regions of interest. Finally, we propose a Multimodal Similarity Evaluation Module (MSE) to address the problem of fuzzy matching between feature regions. Comparative experiments show that our method outperforms existing mainstream methods on several benchmark datasets. This accuracy suggests that the method offers a new approach to problems involving high inter-class similarity.
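The abstract gives no implementation details, so the following is only a minimal, generic sketch of the two ideas it contrasts with conventional classification: training on pairs of features (feature-to-feature) and testing by similarity rather than by a softmax classifier. It is not the SIMNet architecture; the ICA and MSE modules are not modeled. The functions `contrastive_loss`, `cosine_similarity`, and `classify_by_similarity`, the margin value, and the toy labels are hypothetical, and the loss is the standard contrastive loss swapped in for illustration.

```python
import math
from typing import Dict, List, Sequence


def contrastive_loss(a: Sequence[float], b: Sequence[float], same: bool, margin: float = 1.0) -> float:
    """Hypothetical pairwise (feature-to-feature) training loss.

    Standard contrastive loss, used here as an assumption: pulls same-class
    embeddings together and pushes different-class pairs at least `margin` apart.
    """
    d = math.dist(a, b)  # Euclidean distance between the two embeddings
    if same:
        return d * d
    return max(0.0, margin - d) ** 2


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify_by_similarity(query: Sequence[float], references: Dict[str, List[Sequence[float]]]) -> str:
    """Hypothetical similarity-based test step.

    `references` maps each action label to embeddings assumed to come from the
    same shared-weight (Siamese) encoder branch; the query is assigned the label
    with the highest mean cosine similarity.
    """
    best_label, best_score = None, -math.inf
    for label, feats in references.items():
        if not feats:
            continue
        score = sum(cosine_similarity(query, f) for f in feats) / len(feats)
        if score > best_score:
            best_label, best_score = label, score
    if best_label is None:
        raise ValueError("no reference features provided")
    return best_label
```

With toy embeddings such as `{"walk": [[1.0, 0.0, 0.0]], "wave": [[0.0, 1.0, 0.0]]}`, a query near `[1, 0, 0]` is labeled `"walk"`. Averaging over several references per class is one simple choice; a nearest-neighbor or prototype rule would also fit the same similarity-based test mode.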
