An index-free sparse neural network using two-dimensional semiconductor ferroelectric field-effect transistors

IF 40.9

Zone 1 (CAS journal ranking): Engineering and Technology

Q1 ENGINEERING, ELECTRICAL & ELECTRONIC

Citations: 0

Abstract

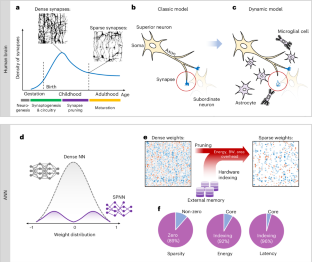

The fine-grained dynamic sparsity in biological synapses is an important element in the energy efficiency of the human brain. Emulating such sparsity in an artificial system requires off-chip memory indexing, which has a considerable energy and latency overhead. Here, we report an in-memory sparsity architecture in which index memory is moved next to individual synapses, creating a sparse neural network without external memory indexing. We use a compact building block consisting of two non-volatile ferroelectric field-effect transistors acting, respectively, as a digital sparsity element and an analogue weight. The network is formulated as the Hadamard product of the sparsity and weight matrices. The hardware, which comprises 900 ferroelectric field-effect transistors, is based on wafer-scale chemical-vapour-deposited molybdenum disulfide integrated through back-end-of-line processes. With the system, we demonstrate key synaptic processes, including pruning, weight update and regrowth, in an unstructured and fine-grained manner. We also develop a vectorial approximate update algorithm and optimize training scheduling. Through this software–hardware co-optimization, we achieve 98.4% accuracy in an EMNIST letter recognition task under 75% sparsity. Simulations on large neural networks show a tenfold reduction in latency and a ninefold reduction in energy consumption when compared with a dense network of the same performance.
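The Hadamard-product formulation mentioned in the abstract can be illustrated with a small software sketch. This is not the authors' implementation: the function names, the toy matrices and the magnitude-based pruning criterion are all assumptions made for illustration. It only shows how a binary sparsity matrix S stored alongside an analogue weight matrix W yields an effective weight S ⊙ W, so that pruning and regrowth change S without an external index and without erasing W.

```python
# Illustrative sketch only: all names and values are hypothetical, not from the paper.

def hadamard(sparsity, weights):
    """Element-wise product S ⊙ W of two equally shaped matrices."""
    return [[s * w for s, w in zip(s_row, w_row)]
            for s_row, w_row in zip(sparsity, weights)]

def forward(sparsity, weights, x):
    """Compute y = (S ⊙ W) x; masked synapses contribute nothing."""
    effective = hadamard(sparsity, weights)
    return [sum(e * xi for e, xi in zip(row, x)) for row in effective]

def prune_smallest(sparsity, weights, fraction):
    """Return a new mask with the smallest-magnitude active weights disabled.

    Magnitude pruning is an assumed criterion, chosen only for illustration.
    """
    active = sorted((abs(weights[i][j]), i, j)
                    for i, row in enumerate(sparsity)
                    for j, s in enumerate(row) if s == 1)
    new_mask = [row[:] for row in sparsity]
    for _, i, j in active[:int(len(active) * fraction)]:
        new_mask[i][j] = 0
    return new_mask

def regrow(sparsity, i, j):
    """Return a new mask with synapse (i, j) re-enabled; its stored weight is untouched."""
    new_mask = [row[:] for row in sparsity]
    new_mask[i][j] = 1
    return new_mask

# Toy 2x4 layer: W holds analogue weights, S holds one sparsity bit per synapse.
W = [[0.5, -0.1, 0.8, 0.05],
     [-0.3, 0.9, 0.02, -0.7]]
S = [[1, 1, 1, 1],
     [1, 1, 1, 1]]
x = [1.0, 2.0, 3.0, 4.0]

S_pruned = prune_smallest(S, W, 0.75)   # disable 6 of the 8 synapses
y_sparse = forward(S_pruned, W, x)

S_regrown = regrow(S_pruned, 0, 0)      # re-enable one synapse
y_regrown = forward(S_regrown, W, x)
```

Because the mask and the weights are kept as separate matrices, regrowing a synapse restores its previously stored weight. In this sketch that is a software convenience; whether the hardware behaves the same way is not stated in the abstract.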



Source journal

Nature Electronics

Engineering-Electrical and Electronic Engineering

CiteScore

47.50

Self-citation rate

2.30%

Articles published

159

About the journal:

Nature Electronics is a comprehensive journal that publishes both fundamental and applied research in the field of electronics. It encompasses a wide range of topics, including the study of new phenomena and devices, the design and construction of electronic circuits, and the practical applications of electronics. In addition, the journal explores the commercial and industrial aspects of electronics research.

The primary focus of Nature Electronics is on the development of technology and its potential impact on society. The journal incorporates the contributions of scientists, engineers, and industry professionals, offering a platform for their research findings. Moreover, Nature Electronics provides insightful commentary, thorough reviews, and analysis of the key issues that shape the field, as well as the technologies that are reshaping society.

Like all journals within the prestigious Nature brand, Nature Electronics upholds the highest standards of quality. It maintains a dedicated team of professional editors and follows a fair and rigorous peer-review process. The journal also ensures impeccable copy-editing and production, enabling swift publication. Additionally, Nature Electronics prides itself on its editorial independence, ensuring unbiased and impartial reporting.

In summary, Nature Electronics is a leading journal that publishes cutting-edge research in electronics. With its multidisciplinary approach and commitment to excellence, the journal serves as a valuable resource for scientists, engineers, and industry professionals seeking to stay at the forefront of advancements in the field.
