CUAHN-VIO: Content-and-uncertainty-aware homography network for visual-inertial odometry

IF 4.3

CAS Zone 2, Computer Science

Q1 AUTOMATION & CONTROL SYSTEMS

Citations: 0

Abstract

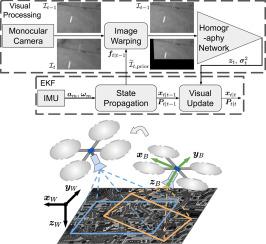

Learning-based visual ego-motion estimation is promising yet not ready for navigating agile mobile robots in the real world. In this article, we propose CUAHN-VIO, a robust and efficient monocular visual-inertial odometry (VIO) system designed for micro aerial vehicles (MAVs) equipped with a downward-facing camera. The vision frontend is a content-and-uncertainty-aware homography network (CUAHN). Content awareness measures the robustness of the network to non-homography image content, e.g., three-dimensional objects lying on a planar surface. Uncertainty awareness means that the network not only predicts the homography transformation but also estimates the uncertainty of that prediction. Training requires no ground truth, which is often difficult to obtain. The network generalizes well, enabling “plug-and-play” deployment in new environments without fine-tuning. A lightweight extended Kalman filter (EKF) serves as the VIO backend and uses the mean prediction and variance estimate from the network for visual measurement updates. CUAHN-VIO is evaluated on a high-speed public dataset and achieves accuracy rivaling that of state-of-the-art (SOTA) VIO approaches. Thanks to its robustness to motion blur, low network inference time (~23 ms), and stable processing latency (~26 ms), CUAHN-VIO runs successfully onboard an Nvidia Jetson TX2 embedded processor to navigate a fast autonomous MAV.
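To illustrate how a filter backend might consume a network's mean prediction together with its variance estimate, the following is a minimal sketch of a Kalman measurement update in which the predicted variances act as the measurement noise. It is not the paper's implementation: the function name `kalman_update_diag`, the state layout, the linear(ized) measurement matrix `H`, and the assumption of a diagonal measurement covariance are all hypothetical choices made for illustration. With a diagonal covariance, the measurement components can be fused one at a time, so no matrix inverse is needed.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def kalman_update_diag(
    x: Sequence[float],
    P: Sequence[Sequence[float]],
    H: Sequence[Sequence[float]],
    z: Sequence[float],
    r_diag: Sequence[float],
) -> Tuple[Vector, Matrix]:
    """Sequential Kalman update for a linear(ized) model z = H x + v.

    x, P   : prior state mean and (symmetric) covariance
    H      : measurement Jacobian, one row per measurement component
    z      : measurement mean (stand-in for the network's mean prediction)
    r_diag : per-component measurement variances (stand-in for the
             network's variance estimate; assumed uncorrelated)
    """
    x = list(x)
    P = [list(row) for row in P]
    n = len(x)
    for h, z_i, r_i in zip(H, z, r_diag):
        # P h^T, the cross-covariance between state and this measurement
        Ph = [sum(P[a][b] * h[b] for b in range(n)) for a in range(n)]
        # Innovation variance: h P h^T + r
        s = sum(h[a] * Ph[a] for a in range(n)) + r_i
        # Kalman gain for this scalar measurement
        k = [v / s for v in Ph]
        # Innovation: measured value minus predicted value
        y = z_i - sum(h[a] * x[a] for a in range(n))
        x = [x[a] + k[a] * y for a in range(n)]
        # Covariance update (I - k h) P, using the symmetry of P
        P = [[P[a][b] - k[a] * Ph[b] for b in range(n)] for a in range(n)]
    return x, P


if __name__ == "__main__":
    # Two directly observed states; the first measurement is reported as
    # confident (small variance), the second as very uncertain.
    x_new, P_new = kalman_update_diag(
        x=[0.0, 0.0],
        P=[[1.0, 0.0], [0.0, 1.0]],
        H=[[1.0, 0.0], [0.0, 1.0]],
        z=[1.0, 1.0],
        r_diag=[1e-2, 1e2],
    )
    print(x_new, P_new)
```

In this toy setup the confident component pulls its state almost all the way to the measurement, while the uncertain component barely moves it. This is the general benefit of feeding a per-measurement variance to the filter instead of a fixed noise level.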



Source journal

Robotics and Autonomous Systems

Engineering & Technology - Robotics

CiteScore

9.00

Self-citation rate

7.00%

Articles published

164

Review time

4.5 months

About the journal:

Robotics and Autonomous Systems will carry articles describing fundamental developments in the field of robotics, with special emphasis on autonomous systems. An important goal of this journal is to extend the state of the art in both symbolic and sensory based robot control and learning in the context of autonomous systems.

Robotics and Autonomous Systems will carry articles on the theoretical, computational and experimental aspects of autonomous systems, or modules of such systems.
