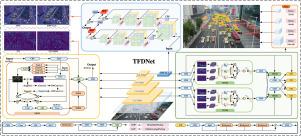

TFDNet: A triple focus diffusion network for object detection in urban congestion with accurate multi-scale feature fusion and real-time capability

Impact Factor: 5.2

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, INFORMATION SYSTEMS

Journal of King Saud University-Computer and Information Sciences

Pub Date : 2024-11-01

DOI:10.1016/j.jksuci.2024.102223

Citations: 0

Abstract

Vehicle detection in congested urban scenes is essential for traffic control and safety management. However, the dense arrangement and occlusion of multi-scale vehicles in such environments present considerable challenges for detection systems. To tackle these challenges, this paper introduces a novel object detection method, dubbed the triple focus diffusion network (TFDNet). Firstly, the gradient convolution is introduced to construct the C2f-EIRM module, replacing the original C2f module, thereby enhancing the network’s capacity to extract edge information. Secondly, by leveraging the concept of the Asymptotic Feature Pyramid Network on the foundation of the Path Aggregation Network, the triple focus diffusion module structure is proposed to improve the network’s ability to fuse multi-scale features. Finally, the SPPF-ELA module employs an Efficient Local Attention mechanism to integrate multi-scale information, thereby significantly reducing the impact of background noise on detection accuracy. Experiments on the VisDrone 2021 dataset reveal that the average detection accuracy of the TFDNet algorithm reached 38.4%, which represents a 6.5% improvement over the original algorithm; similarly, its mAP50:90 performance has increased by 3.7%. Furthermore, on the UAVDT dataset, the TFDNet achieved a 3.3% enhancement in performance compared to the original algorithm. TFDNet, with a processing speed of 55.4 FPS, satisfies the real-time requirements for vehicle detection.
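The abstract does not define the gradient convolution used in the C2f-EIRM module. As a rough illustration of why gradient-style kernels emphasize edge information, the sketch below applies a fixed horizontal Sobel kernel to a tiny grayscale image in plain Python. The Sobel kernel is an assumption standing in for whatever operator the paper uses, and the function name `cross_correlate2d` and the sample image are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

# Horizontal Sobel kernel: responds to left-to-right intensity changes.
# Assumption: a stand-in for the paper's gradient convolution, which the
# abstract does not define.
SOBEL_X: Matrix = [
    [-1.0, 0.0, 1.0],
    [-2.0, 0.0, 2.0],
    [-1.0, 0.0, 1.0],
]


def cross_correlate2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D cross-correlation, the operation CNN 'conv' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the kh x kw window whose top-left corner is (i, j).
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical 4x6 image: dark left half (0.0), bright right half (1.0).
IMAGE: Matrix = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]

EDGE_MAP = cross_correlate2d(IMAGE, SOBEL_X)

if __name__ == "__main__":
    for row in EDGE_MAP:
        print(row)
```

Each output row is `[0.0, 4.0, 4.0, 0.0]`: windows that lie entirely in a flat region produce zero, while windows that straddle the dark-to-bright boundary produce a strong response. A learned gradient convolution would replace the fixed kernel with trainable weights, but the edge-selective behavior is the same idea.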


Source journal

Journal of King Saud University-Computer and Information Sciences

COMPUTER SCIENCE, INFORMATION SYSTEMS

CiteScore: 10.50

Self-citation rate: 8.70%

Articles published: 656

Review time: 29 days

About the journal:

In 2022 the Journal of King Saud University - Computer and Information Sciences will become an author-paid open access journal. Authors who submit their manuscript after October 31st, 2021 will be asked to pay an Article Processing Charge (APC) after acceptance of their paper to make their work immediately, permanently, and freely accessible to all. The Journal of King Saud University - Computer and Information Sciences is a refereed, international journal that covers all aspects of both the foundations of computing and its practical applications.
