A Scalable, Cloud-Based Workflow for Spectrally-Attributed ICESat-2 Bathymetry With Application to Benthic Habitat Mapping Using Deep Learning

Abstract

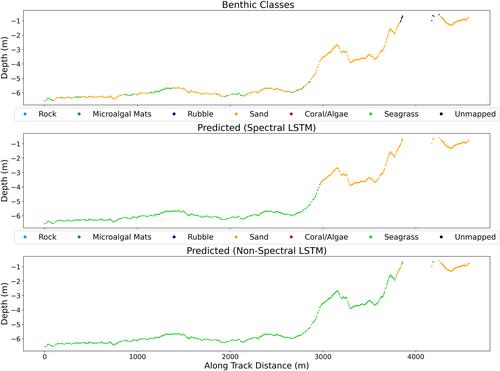

Since the 2018 launch of NASA's ICESat-2 satellite, numerous studies have documented the bathymetric measurement capabilities of the space-based laser altimeter. However, a commonly identified limitation of ICESat-2 bathymetric point clouds is that they lack accompanying spectral reflectance attributes, or even intensity values, both of which have been found useful for benthic habitat mapping with airborne bathymetric lidar. We present a novel method for extracting bathymetry from ICESat-2 data and automatically attaching spectral reflectance values from Sentinel-2 imagery to each detected bathymetric point. This method, which leverages the cloud computing systems Google Earth Engine and NASA's SlideRule Earth, is well suited to “big data” projects with ICESat-2 data products. To demonstrate the scalability of our workflow, we collected 3,500 ICESat-2 segments containing approximately 1.4 million spectrally-attributed bathymetric points. We then used this data set to train a deep recurrent neural network for classifying benthic habitats at the ICESat-2 photon level. We trained two identical models, one with and one without the spectral attributes, to investigate the benefits of fusing ICESat-2 photons with Sentinel-2 imagery. The results show that including the spectral attributes improved model performance by 18 percentage points, based on F1 score. The procedures and source code are publicly available and will enhance the value of the new ICESat-2 bathymetry data product, ATL24, which is scheduled for release in Fall 2024. These procedures may also be applicable to data from NASA's upcoming CASALS mission.
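The core idea of spectral attribution, looking up the image pixel that contains each bathymetric point and copying its band values onto that point, can be illustrated with a minimal sketch. This is not the authors' implementation, which runs on Google Earth Engine and SlideRule Earth; it assumes a north-up raster already held in memory, and the function name `attach_reflectance`, the grid parameters, and all numeric values are hypothetical. `B2` and `B3` are used only as example Sentinel-2 band labels.

```python
import math


def attach_reflectance(points, bands, origin_x, origin_y, pixel_size):
    """Attach the containing-pixel value of each band to every point.

    points: iterable of (x, y, depth) in the raster's projected CRS
    bands: dict mapping band name -> 2D list (rows run north to south)
    origin_x, origin_y: coordinates of the raster's upper-left corner
    pixel_size: pixel edge length, in the same units as x and y
    """
    attributed = []
    for x, y, depth in points:
        # Convert map coordinates to integer pixel indices.
        col = math.floor((x - origin_x) / pixel_size)
        row = math.floor((origin_y - y) / pixel_size)
        record = {"x": x, "y": y, "depth": depth}
        for name, grid in bands.items():
            inside = 0 <= row < len(grid) and 0 <= col < len(grid[0])
            # Points that fall outside the image get no reflectance value.
            record[name] = grid[row][col] if inside else None
        attributed.append(record)
    return attributed


# Made-up 2 x 2 reflectance grids with 10 m pixels (illustrative values only).
bands = {
    "B2": [[0.10, 0.20], [0.30, 0.40]],
    "B3": [[0.50, 0.60], [0.70, 0.80]],
}
# Made-up bathymetric points as (x, y, depth); the last lies outside the image.
points = [(5.0, 15.0, -2.0), (15.0, 5.0, -3.5), (25.0, 5.0, -1.0)]

attributed = attach_reflectance(
    points, bands, origin_x=0.0, origin_y=20.0, pixel_size=10.0
)
```

Each returned record keeps the point's position and depth and gains one entry per band, which is the kind of per-photon feature vector a classifier could then consume. Points outside the image footprint are kept with `None` values rather than dropped, so the caller decides how to handle them.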
