Understanding and mitigating the impact of race with adversarial autoencoders

IF 5.4

Q1 MEDICINE, RESEARCH & EXPERIMENTAL

Citations: 0

Abstract

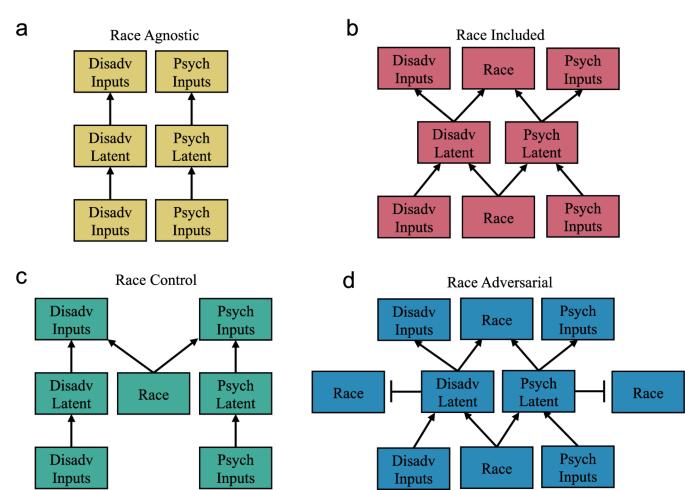

Artificial intelligence carries the risk of exacerbating some of our most challenging societal problems, but it also has the potential to mitigate and address them. The confounding effects of race on machine learning are an ongoing subject of research. This study aims to mitigate the impact of race on data-derived models using an adversarial variational autoencoder (AVAE). In this study, race is a self-reported feature. Race is often excluded as an input variable; however, because race is highly correlated with several other variables, it remains implicitly encoded in the data. We propose building a model that (1) learns a low-dimensional latent space, (2) employs an adversarial training procedure that ensures its latent space does not encode race, and (3) retains the information necessary for reconstructing the data. We train the autoencoder to ensure the latent space does not indirectly encode race. In this study, the AVAE successfully removes information about race from the latent space (AUC ROC = 0.5). In contrast, latent spaces constructed using other approaches still allow race to be reconstructed with high fidelity. The AVAE's latent space does not encode race but conveys the information required to reconstruct the dataset. Furthermore, the AVAE's latent space does not predict variables related to race (R² = 0.003), while a model that includes race does (R² = 0.08). Though we constructed a race-independent latent space, any variable could be similarly controlled. We expect AVAEs to be one of many approaches that will be required to effectively manage and understand bias in ML.

Computer models used in healthcare can sometimes be biased based on race, leading to unfair outcomes. Our study focuses on understanding and reducing the impact of self-reported race in computer models that learn from data. We use a model called an Adversarial Variational Autoencoder (AVAE), which helps ensure that the models don't accidentally use race in their calculations. The AVAE technique creates a simplified version of the data, called a latent space, that leaves out race information but keeps other important details needed for accurate predictions. Our results show that this approach successfully removes race information from the models while still allowing them to work well. This method is one of many steps needed to address bias in computer learning and ensure fairer outcomes. Our findings highlight the importance of developing tools that can manage and understand bias, contributing to more equitable and trustworthy technology.

Sarullo and Swamidass use an adversarial variational autoencoder (AVAE) to remove race information from computer models while retaining essential data for accurate predictions, effectively reducing bias. This approach highlights the importance of developing tools to manage bias, ensuring fairer and more trustworthy technology.
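To make the AUC ROC = 0.5 result concrete, here is a minimal sketch of how such a check can be read. It assumes a hypothetical adversary that assigns each sample a score for a binary group label from the latent space. The helper name `roc_auc` and the toy labels and scores are illustrative assumptions, not data or code from the study. The AUC is computed as the fraction of (positive, negative) pairs in which the positive sample scores higher, with ties counted as one half.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count as 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one sample from each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy binary group labels (hypothetical, for illustration only).
labels = [0, 0, 1, 1]

# Adversary scores from a latent space that leaks the label: perfectly separable.
leaky_scores = [0.1, 0.2, 0.8, 0.9]

# Adversary scores from a latent space that carries no label information:
# the score distribution is the same in both groups.
blind_scores = [0.2, 0.8, 0.2, 0.8]

auc_leaky = roc_auc(labels, leaky_scores)
auc_blind = roc_auc(labels, blind_scores)
print(auc_leaky, auc_blind)
```

An AUC near 1.0 means the adversary can recover the label from the latent representation, while an AUC of 0.5 means it does no better than chance, which is the outcome the abstract reports for the AVAE's latent space.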

