Diverse non-homogeneous texture synthesis from a single exemplar

IF 2.5

Zone 4, Computer Science

Q2 COMPUTER SCIENCE, SOFTWARE ENGINEERING

Citations: 0

Abstract

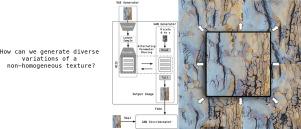

Capturing the non-local, long-range features present in non-homogeneous textures is difficult with existing techniques. We introduce a new training method and architecture for single-exemplar texture synthesis that combines a Generative Adversarial Network (GAN) and a Variational Autoencoder (VAE). In the proposed architecture, the combined networks share information during training via structurally identical, independent blocks, facilitating highly diverse texture variations from a single image exemplar. To support this training method, we also include a similarity loss term that further encourages diverse output while improving overall quality. With our approach, a single model that can be trained in approximately 15 minutes produces diverse results across the entire set of samples drawn from it. We show that our approach outperforms state-of-the-art (SOTA) texture synthesis methods and single-image GAN methods on standard diversity and quality metrics.

Source journal

Computers & Graphics-Uk

Engineering & Technology - Computer Science: Software Engineering

CiteScore

5.30

Self-citation rate

12.00%

Articles published

173

Review time

38 days

About the journal:

Computers & Graphics is dedicated to disseminating information on research and applications of computer graphics (CG) techniques. The journal encourages articles on:

1. Research and applications of interactive computer graphics. We are particularly interested in novel interaction techniques and applications of CG to problem domains.

2. State-of-the-art papers on late-breaking, cutting-edge research on CG.

3. Information on innovative uses of graphics principles and technologies.

4. Tutorial papers on both teaching CG principles and innovative uses of CG in education.
