Correlating measures of hierarchical structures in artificial neural networks with their performance


Abstract

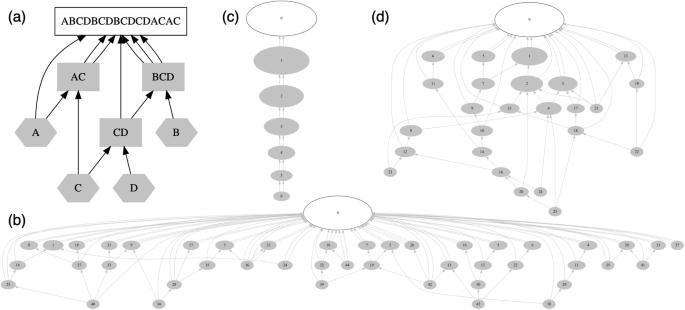

This study employs the recently developed Ladderpath approach, which falls within the broader category of Algorithmic Information Theory (AIT) and characterizes the hierarchical and nested relationships among repeating substructures, to explore the structure-function relationship in neural networks, in particular multilayer perceptrons (MLPs). The order-rate η, a metric derived from the approach, measures structural orderliness: when η is in the middle range (around 0.5), the structure exhibits the richest hierarchical relationships, corresponding to the highest complexity. We hypothesize that the highest structural complexity correlates with optimal functionality. Our experiments support this hypothesis in several ways: networks with η values in the middle range show superior performance, and training tends to adjust η towards this range naturally; additionally, initializing neural networks with η values in this middle range appears to boost performance. Intriguingly, these findings align with observations in other distinct systems, including chemical molecules and protein sequences, hinting at a hidden regularity captured by this theoretical framework.

