Introducing MagBERT: A language model for magnesium textual data mining and analysis

IF 15.8

Tier 1, Materials Science

Q1 METALLURGY & METALLURGICAL ENGINEERING

Citations: 0

Abstract

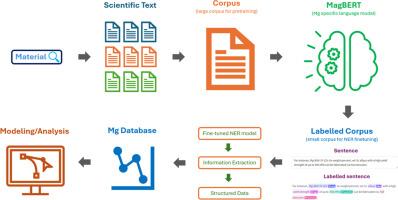

Magnesium (Mg)-based materials hold immense potential for a wide range of applications owing to their light weight and high strength-to-weight ratio. To fully harness the potential of Mg alloys, however, structured analytics are needed to draw insight from centuries of accumulated knowledge, and that in turn requires efficient information extraction from the vast corpus of scientific literature. In this work, we introduce MagBERT, a BERT-based language model trained specifically on text about Mg-based materials. Trained on a dataset of approximately 370,000 abstracts focused on Mg and its alloys, MagBERT is designed to capture the intricate details and specialized terminology of this domain. Through rigorous evaluation, we demonstrate the effectiveness of MagBERT for information extraction using a fine-tuned named entity recognition (NER) model, MagNER. This NER model can extract mechanical, microstructural, and processing properties of Mg alloys. As an example, we have created an Mg alloy dataset that includes properties such as ductility, yield strength, and ultimate tensile strength (UTS), along with standard alloy names. MagBERT is a novel advance in the development of Mg-specific language models and marks a significant milestone for Mg alloy discovery and textual information extraction. By making the pre-trained weights of MagBERT publicly accessible, we aim to accelerate research and innovation in the field of Mg-based materials through efficient information extraction and knowledge discovery.
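To make the NER step more concrete, the following minimal sketch shows how per-token tags from a token-classification model are commonly merged into entity spans. It is not MagNER's actual code: the BIO tagging scheme, the label names (`ALLOY`, `PROPERTY`, `VALUE`), the function name `decode_bio`, and the example sentence with its tags are all assumptions made up for illustration.

```python
from typing import List, Optional, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge BIO-tagged tokens into (entity_text, label) pairs."""
    entities: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_label: Optional[str] = None

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity; close any open one first.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens = [token]
            current_label = tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # An I- tag with the same label continues the open entity.
            current_tokens.append(token)
        else:
            # "O", or an I- tag that does not continue the open entity:
            # close the open entity and skip this token.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None

    if current_tokens:
        entities.append((" ".join(current_tokens), current_label))
    return entities


# Hypothetical sentence and tags, invented for illustration only
# (not taken from the paper or its dataset).
tokens = ["The", "AZ31", "alloy", "showed", "a", "yield", "strength",
          "of", "200", "MPa", "."]
tags = ["O", "B-ALLOY", "O", "O", "O", "B-PROPERTY", "I-PROPERTY",
        "O", "B-VALUE", "I-VALUE", "O"]

entities = decode_bio(tokens, tags)
for text, label in entities:
    print(f"{label}: {text}")
```

Rows of a structured alloy dataset like the one described above could then be assembled from such (text, label) pairs, although how the authors link properties to alloy names is not specified in the abstract.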



Source journal

Journal of Magnesium and Alloys

Engineering-Mechanics of Materials

CiteScore: 20.20

Self-citation rate: 14.80%

Articles published: 52

Review time: 59 days

Journal description:

The Journal of Magnesium and Alloys serves as a global platform for both theoretical and experimental studies in magnesium science and engineering. It welcomes submissions investigating various scientific and engineering factors impacting the metallurgy, processing, microstructure, properties, and applications of magnesium and alloys. The journal covers all aspects of magnesium and alloy research, including raw materials, alloy casting, extrusion and deformation, corrosion and surface treatment, joining and machining, simulation and modeling, microstructure evolution and mechanical properties, new alloy development, magnesium-based composites, bio-materials and energy materials, applications, and recycling.
