Resolving Collisions in Dense 3D Crowd Animations

IF 4.7

Zone 2, Chemistry

Q2 MATERIALS SCIENCE, MULTIDISCIPLINARY

Citations: 0

Abstract

We propose a novel contact-aware method to synthesize highly dense 3D crowds of animated characters. Existing methods animate crowds by first computing the 2D global motion, approximating subjects as 2D particles, and then adding individual character motions without considering their surroundings. This creates the illusion of a 3D crowd, but at high densities characters frequently intersect one another, because character-to-character contact is not modeled. We tackle this issue with a general method that takes any crowd animation as input and resolves its residual collisions. To this end, we take a physics-based approach to model contacts between articulated characters. This enables the real-time synthesis of high-density 3D crowds with dozens of individuals that do not intersect each other, producing an unprecedented level of physical correctness in animations. Under the hood, we model each individual using a parametric human body model that incorporates a set of 3D proxies approximating its volume. We then build a large system of articulated rigid bodies and use an efficient physics-based approach to solve for individual body poses that do not collide with each other while maintaining the overall motion of the crowd. We first validate our approach objectively and quantitatively. We then explore the relation between physical correctness and perceived realism through an extensive user study that evaluates the relevance of resolving contacts in dense crowds. Results demonstrate that our approach outperforms existing crowd animation methods in terms of geometric accuracy and overall realism.
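The abstract does not specify the proxy shapes or the solver. As a rough illustration of what resolving residual collisions between volume proxies involves, here is a minimal Python sketch that assumes each body part is approximated by a capsule (a line segment swept by a sphere) and pushes overlapping pairs apart with a simple position-based projection. This is not the authors' method: it ignores joints, articulation, and dynamics, and moves each proxy rigidly. `Capsule`, `closest_points_segments`, `contact`, `resolve_pair`, and `resolve_all` are hypothetical names introduced only for this example.

```python
# Minimal sketch (assumption): capsule proxies + position-based projection.
# This is NOT the paper's articulated rigid-body solver.
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float, float]


def add(u: Vec, v: Vec) -> Vec:
    return (u[0] + v[0], u[1] + v[1], u[2] + v[2])


def sub(u: Vec, v: Vec) -> Vec:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def scale(u: Vec, k: float) -> Vec:
    return (u[0] * k, u[1] * k, u[2] * k)


def dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def clamp01(x: float) -> float:
    return min(1.0, max(0.0, x))


@dataclass
class Capsule:
    """Hypothetical body-part proxy: segment a-b swept by a sphere of `radius`."""
    a: Vec
    b: Vec
    radius: float


def closest_points_segments(p1: Vec, q1: Vec, p2: Vec, q2: Vec, eps: float = 1e-12):
    """Closest points between segments p1-q1 and p2-q2 (standard clamped solution)."""
    d1, d2, r = sub(q1, p1), sub(q2, p2), sub(p1, p2)
    a, e, f = dot(d1, d1), dot(d2, d2), dot(d2, r)
    if a <= eps and e <= eps:          # both segments degenerate to points
        return p1, p2
    if a <= eps:                       # first segment is a point
        s, t = 0.0, clamp01(f / e)
    else:
        c = dot(d1, r)
        if e <= eps:                   # second segment is a point
            s, t = clamp01(-c / a), 0.0
        else:
            b = dot(d1, d2)
            denom = a * e - b * b      # zero when the segments are parallel
            s = clamp01((b * f - c * e) / denom) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:                # clamp t to the segment, then recompute s
                s, t = clamp01(-c / a), 0.0
            elif t > 1.0:
                s, t = clamp01((b - c) / a), 1.0
    return add(p1, scale(d1, s)), add(p2, scale(d2, t))


def contact(c1: Capsule, c2: Capsule):
    """Return (vector from c2 toward c1, core distance, penetration depth)."""
    p, q = closest_points_segments(c1.a, c1.b, c2.a, c2.b)
    delta = sub(p, q)
    dist = math.sqrt(dot(delta, delta))
    return delta, dist, c1.radius + c2.radius - dist


def resolve_pair(c1: Capsule, c2: Capsule) -> float:
    """Translate two overlapping capsules apart symmetrically; return the depth fixed."""
    delta, dist, depth = contact(c1, c2)
    if depth <= 0.0:
        return 0.0
    # Arbitrary separating direction if the core segments touch exactly (assumption).
    n = scale(delta, 1.0 / dist) if dist > 1e-12 else (1.0, 0.0, 0.0)
    corr = scale(n, 0.5 * depth)       # split the correction evenly between both
    c1.a, c1.b = add(c1.a, corr), add(c1.b, corr)
    c2.a, c2.b = sub(c2.a, corr), sub(c2.b, corr)
    return depth


def resolve_all(capsules: List[Capsule], iterations: int = 20) -> float:
    """Gauss-Seidel sweeps over all pairs; return the largest depth fixed in the last sweep."""
    worst = 0.0
    for _ in range(iterations):
        worst = 0.0
        for i in range(len(capsules)):
            for j in range(i + 1, len(capsules)):
                worst = max(worst, resolve_pair(capsules[i], capsules[j]))
    return worst
```

For example, three upright capsules of radius 0.2 placed 0.2 apart along x overlap pairwise; after `resolve_all(caps, iterations=30)` they sit about 0.4 apart with no remaining overlap. Splitting each correction evenly leaves the midpoint of every contact pair in place, so the mean position of the proxies is unchanged; this is only a crude stand-in for the abstract's goal of maintaining the overall motion of the crowd.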

来源期刊

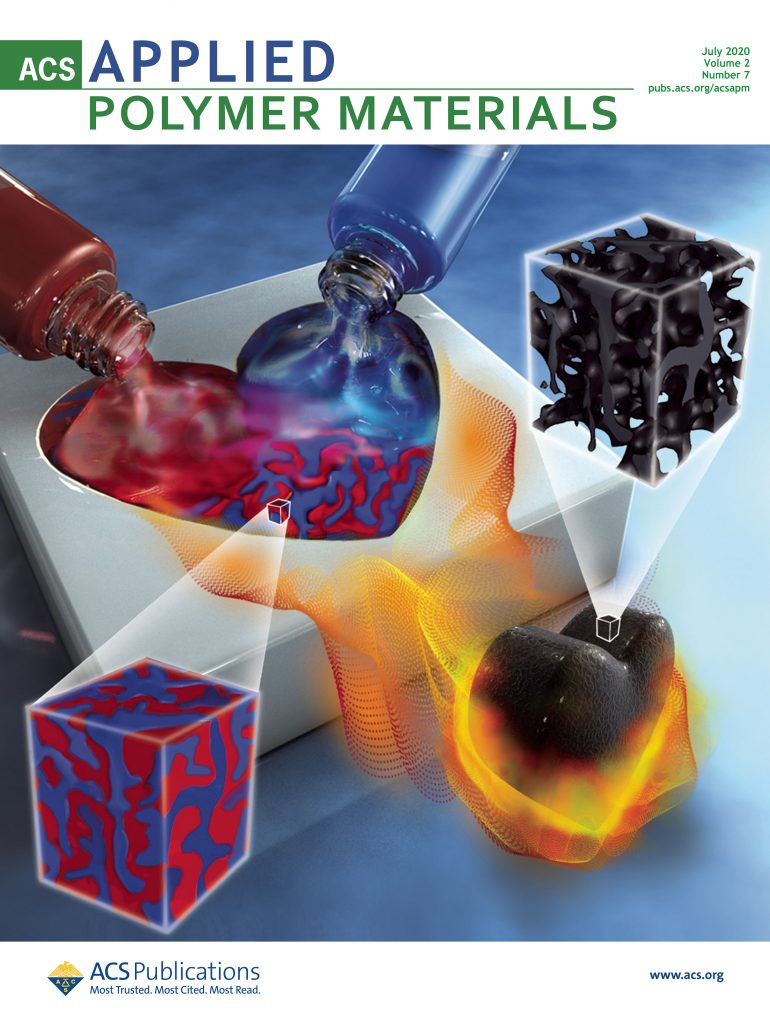

ACS Applied Polymer Materials

Multiple-

CiteScore

7.20

Self-citation rate

6.00%

Articles published

810