PIM-GPT: A Hybrid Process-in-Memory Accelerator for Autoregressive Transformers

Citations: 0

Abstract

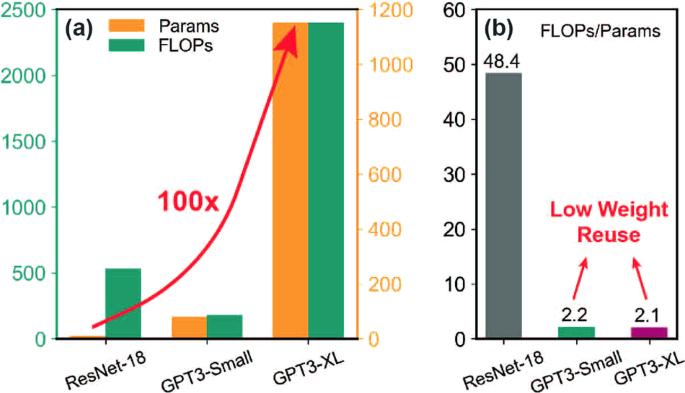

Decoder-only Transformer models such as Generative Pre-trained Transformers (GPT) have demonstrated exceptional performance in text generation by autoregressively predicting the next token. However, the efficiency of running GPT on current hardware systems is limited by a low compute-to-memory ratio and heavy memory access. In this work, we propose a Process-in-memory (PIM) GPT accelerator, PIM-GPT, which achieves end-to-end acceleration of GPT inference with high performance and high energy efficiency. PIM-GPT leverages DRAM-based PIM designs to execute multiply-accumulate (MAC) operations directly in the DRAM chips, eliminating the need to move matrix data off-chip. Non-linear functions and data communication are supported by an application-specific integrated chip (ASIC). At the software level, mapping schemes are designed to maximize data locality and computation parallelism. Overall, PIM-GPT achieves 41–137× and 631–1074× speedup, and 123–383× and 320–602× higher energy efficiency, over GPU and CPU baselines, respectively, on 8 GPT models with up to 1.4 billion parameters.
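The low compute-to-memory ratio mentioned above can be illustrated with a back-of-the-envelope calculation. The sketch below is not taken from the paper: it assumes that generating one token reduces each weight layer to a matrix-vector product, that values are stored in 2 bytes (for example fp16), and that every weight is read from memory exactly once. The function name `gemv_arithmetic_intensity` is hypothetical.

```python
def gemv_arithmetic_intensity(d_out: int, d_in: int, bytes_per_value: int = 2) -> float:
    """Estimate FLOPs per byte moved for y = W @ x with W of shape (d_out, d_in).

    Assumptions (illustrative only, not from the paper):
    - each multiply-accumulate counts as 2 FLOPs;
    - W, x, and y are each transferred once, with no cache reuse;
    - every value occupies `bytes_per_value` bytes.
    """
    flops = 2 * d_out * d_in
    bytes_moved = bytes_per_value * (d_out * d_in + d_in + d_out)
    return flops / bytes_moved


if __name__ == "__main__":
    # Square layers of a few plausible hidden sizes (assumed values).
    for hidden in (768, 1024, 2048):
        ratio = gemv_arithmetic_intensity(hidden, hidden)
        print(f"hidden={hidden}: {ratio:.3f} FLOPs/byte")
```

Under these assumptions the ratio stays close to 1 FLOP per byte regardless of layer size, which suggests why moving the MAC operations into the DRAM chips, rather than moving the weight matrices off-chip, is attractive for this workload.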

