SimPLE, a visuotactile method learned in simulation to precisely pick, localize, regrasp, and place objects

IF 27.5

Zone 1, Computer Science

Q1 ROBOTICS

Citations: 0

Abstract

Existing robotic systems have a tension between generality and precision. Deployed solutions for robotic manipulation tend to fall into the paradigm of one robot solving a single task, lacking “precise generalization,” or the ability to solve many tasks without compromising on precision. This paper explores solutions for precise and general pick and place. In precise pick and place, or kitting, the robot transforms an unstructured arrangement of objects into an organized arrangement, which can facilitate further manipulation. We propose SimPLE (Simulation to Pick Localize and placE) as a solution to precise pick and place. SimPLE learns to pick, regrasp, and place objects given the object’s computer-aided design model and no prior experience. We developed three main components: task-aware grasping, visuotactile perception, and regrasp planning. Task-aware grasping computes affordances of grasps that are stable, observable, and favorable to placing. The visuotactile perception model relies on matching real observations against a set of simulated ones through supervised learning to estimate a distribution of likely object poses. Last, we computed a multistep pick-and-place plan by solving a shortest-path problem on a graph of hand-to-hand regrasps. On a dual-arm robot equipped with visuotactile sensing, SimPLE demonstrated pick and place of 15 diverse objects. The objects spanned a wide range of shapes, and SimPLE achieved successful placements into structured arrangements with 1-mm clearance more than 90% of the time for six objects and more than 80% of the time for 11 objects.
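
The abstract says the visuotactile perception model matches a real observation against a set of simulated ones to estimate a distribution of likely object poses. The sketch below shows only that matching-to-distribution step, and it rests on several assumptions. Each simulated observation is assumed to be already reduced to an embedding vector at a known candidate pose. Similarity is taken to be cosine similarity. Scores become probabilities through a temperature softmax. The function names, the `temperature` parameter, and the toy vectors are hypothetical, and none of this is the paper's learned model.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two (assumed nonzero) embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def pose_distribution(
    real_embedding: Sequence[float],
    simulated: List[Tuple[str, Sequence[float]]],
    temperature: float = 0.1,
) -> List[Tuple[str, float]]:
    """Turn similarities to simulated observations into pose probabilities.

    `simulated` holds (pose_label, embedding) pairs. The embeddings are assumed
    to come from some learned encoder, which is hypothetical here.
    """
    scores = [cosine_similarity(real_embedding, emb) / temperature for _, emb in simulated]
    peak = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [(pose, w / total) for (pose, _), w in zip(simulated, weights)]


# Toy example: the real observation most resembles the simulated "pose_a".
SIMULATED = [("pose_a", [1.0, 0.1]), ("pose_b", [0.0, 1.0]), ("pose_c", [0.7, 0.7])]
dist = pose_distribution([1.0, 0.0], SIMULATED)
best_pose = max(dist, key=lambda item: item[1])[0]
```

Returning a full distribution instead of a single pose estimate matches the abstract's framing. A downstream step can then weigh several plausible poses instead of committing to one.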
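
The abstract describes regrasp planning as a shortest-path problem on a graph of hand-to-hand regrasps. One minimal way to sketch that idea is Dijkstra's algorithm, under the following assumptions:

- each node is a (hand, grasp) pair;
- each edge is a feasible handoff with a nonnegative cost;
- the goal set contains the grasps from which placement is feasible.

The node labels, costs, and the `plan_regrasps` name are hypothetical illustrations, not taken from the paper.

```python
import heapq
import itertools
from typing import Dict, Hashable, List, Optional, Set, Tuple

# Hypothetical regrasp graph: node -> list of (next_node, cost).
# Nodes are (hand, grasp_id) pairs; edges are assumed feasible handoffs.
Graph = Dict[Hashable, List[Tuple[Hashable, float]]]


def plan_regrasps(
    graph: Graph, start: Hashable, goals: Set[Hashable]
) -> Optional[Tuple[List[Hashable], float]]:
    """Return the cheapest grasp sequence from start to any goal, or None."""
    tie = itertools.count()  # tie-breaker so nodes never need to be compared
    heap = [(0.0, next(tie), start)]
    dist = {start: 0.0}
    parent: Dict[Hashable, Optional[Hashable]] = {start: None}
    done = set()
    while heap:
        d, _, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        if node in goals:
            # Walk parent pointers back to the start to recover the plan.
            path = [node]
            while parent[path[-1]] is not None:
                path.append(parent[path[-1]])
            return path[::-1], d
        for nxt, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                parent[nxt] = node
                heapq.heappush(heap, (nd, next(tie), nxt))
    return None  # no goal grasp is reachable


# Toy graph: handing off via ("right", "g1") is cheaper than via ("right", "g2").
EXAMPLE_GRAPH: Graph = {
    ("left", "g0"): [(("right", "g1"), 1.0), (("right", "g2"), 3.0)],
    ("right", "g1"): [(("left", "g3"), 1.0)],
    ("right", "g2"): [(("left", "g3"), 0.5)],
}
plan = plan_regrasps(EXAMPLE_GRAPH, ("left", "g0"), {("left", "g3")})
print(plan)  # ([('left', 'g0'), ('right', 'g1'), ('left', 'g3')], 2.0)
```

Stopping at the first goal popped from the queue is valid because Dijkstra's algorithm settles nodes in order of increasing cost.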

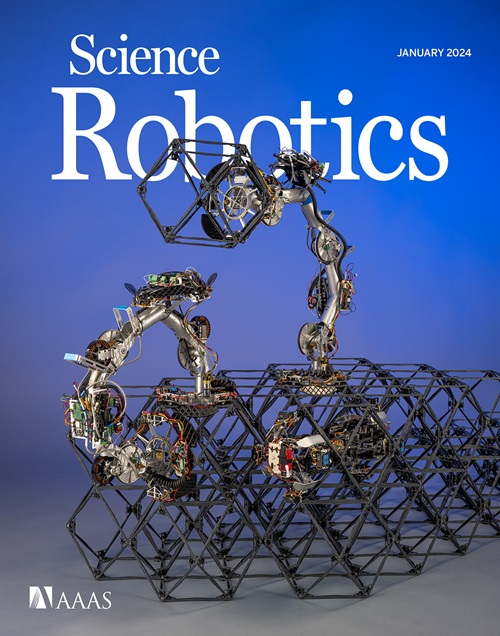

Source journal

Science Robotics

Mathematics-Control and Optimization

CiteScore

30.60

Self-citation rate

2.80%

Articles published

83

Journal description:

Science Robotics publishes original, peer-reviewed, science- or engineering-based research articles that advance the field of robotics. The journal also features editor-commissioned Reviews. An international team of academic editors holds Science Robotics articles to the same high-quality standard that is the hallmark of the Science family of journals.

Sub-topics include: actuators, advanced materials, artificial intelligence, autonomous vehicles, bio-inspired design, exoskeletons, fabrication, field robotics, human-robot interaction, humanoids, industrial robotics, kinematics, machine learning, material science, medical technology, motion planning and control, micro- and nano-robotics, multi-robot control, sensors, service robotics, social and ethical issues, soft robotics, and space, planetary and undersea exploration.
