Towards equitable AI in oncology

IF 81.1

Zone 1, Medicine

Q1 ONCOLOGY

Citations: 0

Abstract

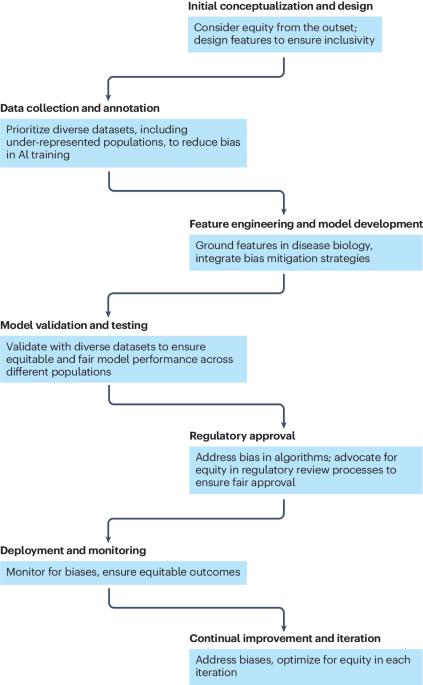

Artificial intelligence (AI) stands at the threshold of revolutionizing clinical oncology, with considerable potential to improve early cancer detection and risk assessment, and to enable more accurate personalized treatment recommendations. However, a notable imbalance exists in the distribution of the benefits of AI, which disproportionately favour those living in specific geographical locations and in specific populations. In this Perspective, we discuss the need to foster the development of equitable AI tools that are both accurate in and accessible to a diverse range of patient populations, including those in low-income to middle-income countries. We also discuss some of the challenges and potential solutions in attaining equitable AI, including addressing the historically limited representation of diverse populations in existing clinical datasets and the use of inadequate clinical validation methods. Additionally, we focus on extant sources of inequity including the type of model approach (such as deep learning, and feature engineering-based methods), the implications of dataset curation strategies, the need for rigorous validation across a variety of populations and settings, and the risk of introducing contextual bias that comes with developing tools predominantly in high-income countries.

Artificial intelligence (AI) has the potential to dramatically change several aspects of oncology including diagnosis, early detection and treatment-related decision making. However, many of the underlying algorithms have been or are being trained on datasets that do not necessarily reflect the diversity of the target population. For this, and other reasons, many AI tools might not be suitable for application in less economically developed countries and/or in patients of certain ethnicities. In this Perspective, the authors discuss possible sources of inequity in AI development, and how to ensure the development and implementation of equitable AI tools for use in patients with cancer.

Source journal

CiteScore: 99.40

Self-citation rate: 0.40%

Articles published: 114

Review time: 6-12 weeks

About the journal:

Nature Reviews publishes clinical content authored by internationally renowned clinical academics and researchers, catering to readers in the medical sciences at postgraduate levels and beyond. Although targeted at practicing doctors, researchers, and academics within specific specialties, the aim is to ensure accessibility for readers across various medical disciplines. The journal features in-depth Reviews offering authoritative and current information, contextualizing topics within the history and development of a field. Perspectives, News & Views articles, and the Research Highlights section provide topical discussions, opinions, and filtered primary research from diverse medical journals.
