Quantifying dysmorphologies of the neurocranium using artificial neural networks

Abstract

Background

Craniosynostosis, a congenital condition characterized by the premature fusion of cranial sutures, calls for objective methods of evaluating cranial morphology to improve patient treatment. Current assessments are largely subjective and often lead to inconsistent outcomes. This study introduces a novel quantitative approach to classifying craniosynostosis and measuring its severity.

Methods

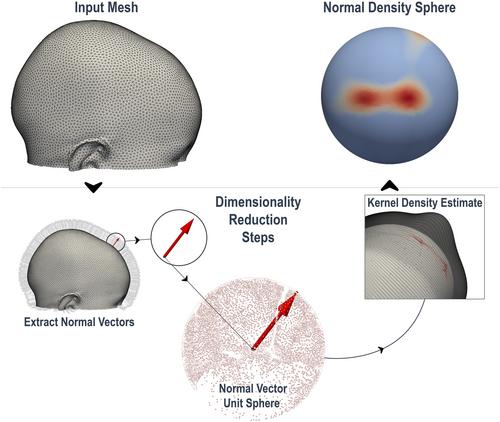

An artificial neural network was trained to classify normocephalic, trigonocephalic, and scaphocephalic head shapes using a publicly available dataset of synthetic 3D head models. Each 3D model was converted into a low-dimensional shape representation based on the distribution of its surface normal vectors. This representation served as the input to the neural network, ensured complete patient anonymity, and made the analysis invariant to geometric size and orientation. Explainable AI methods were used to highlight the features that most influenced the network's predictions. Additionally, a novel metric, the Feature Prominence (FP) score, was introduced to capture the prominence of the distinct shape characteristics associated with a given class. Its relationship with clinical severity scores was examined using the Spearman rank correlation coefficient.
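
The abstract does not specify how the distribution of normal vectors is turned into a fixed-length, size- and orientation-invariant input. One way to obtain both invariances, sketched below with NumPy under that assumption, is to sample pairs of mesh triangles with probability proportional to their area and histogram the cosine of the angle between their unit normals: unit normals discard scale, and dot products are unchanged by rotation. This pairwise-cosine histogram is a swapped-in illustration, not necessarily the authors' representation, and the function names, bin count, and sample size are hypothetical.

```python
import numpy as np

def face_normals_and_areas(vertices, faces):
    """Return unit normals and areas of the non-degenerate triangles of a mesh."""
    v0, v1, v2 = (vertices[faces[:, k]] for k in range(3))
    cross = np.cross(v1 - v0, v2 - v0)        # length equals twice the triangle area
    lengths = np.linalg.norm(cross, axis=1)
    keep = lengths > 0                        # drop zero-area (degenerate) triangles
    normals = cross[keep] / lengths[keep, None]
    areas = 0.5 * lengths[keep]
    return normals, areas

def normal_cosine_histogram(vertices, faces, bins=32, n_pairs=20000, seed=0):
    """Hypothetical size- and rotation-invariant shape descriptor.

    Samples triangle pairs with probability proportional to area and
    histograms the cosine between their unit normals. Rescaling or rotating
    the mesh leaves the result unchanged up to floating-point noise."""
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces, dtype=int)
    normals, areas = face_normals_and_areas(vertices, faces)
    rng = np.random.default_rng(seed)
    p = areas / areas.sum()                   # area-weighted sampling probabilities
    i = rng.choice(len(normals), size=n_pairs, p=p)
    j = rng.choice(len(normals), size=n_pairs, p=p)
    cos = np.clip(np.einsum("ij,ij->i", normals[i], normals[j]), -1.0, 1.0)
    hist, _ = np.histogram(cos, bins=bins, range=(-1.0, 1.0))
    return hist / hist.sum()                  # probability vector of length `bins`
```

For example, `normal_cosine_histogram(V, F, bins=33)` on a triangle mesh with vertex array `V` (shape n×3) and face index array `F` (shape m×3) returns a 33-element probability vector that could serve as classifier input. A plain histogram of normal directions on the sphere would keep more information but would rotate with the head unless the models were first aligned, which is why a rotation-invariant statistic is used in this sketch.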

Results

The final model achieved excellent test accuracy in classifying the different cranial shapes from their low-dimensional representation. Attention maps showed that the network focused predominantly on the parietal and temporal regions, as well as on the region indicating vertex depression in scaphocephaly. In trigonocephaly, features around the temples were most pronounced. The FP score showed a strong positive monotonic relationship with clinical severity scores in both the scaphocephalic (ρ = 0.83, p < 0.001) and trigonocephalic (ρ = 0.64, p < 0.001) models. Visual assessment further confirmed that phenotypic severity became increasingly evident as FP values rose.
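
The ρ values above are Spearman rank correlation coefficients. A minimal NumPy sketch of that statistic follows: values are ranked (ties share the average rank) and the Pearson correlation of the ranks is taken. The two-sided permutation p-value is a swapped-in way to obtain significance; the abstract does not state how its p-values were computed, and all function names and defaults here are hypothetical.

```python
import numpy as np

def average_ranks(x):
    """1-based ranks of x; tied values share the mean of their positions."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1                                  # extend the run of tied values
        ranks[order[i:j + 1]] = (i + j) / 2 + 1     # mean of 1-based positions i+1..j+1
        i = j + 1
    return ranks

def spearman_rho(a, b):
    """Spearman's rho: Pearson correlation of the average ranks of a and b."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])

def permutation_p_value(a, b, n_perm=5000, seed=0):
    """Two-sided permutation p-value for rho, with the usual +1 correction."""
    rng = np.random.default_rng(seed)
    observed = abs(spearman_rho(a, b))
    b = np.asarray(b, dtype=float)
    hits = sum(abs(spearman_rho(a, rng.permutation(b))) >= observed
               for _ in range(n_perm))
    return (hits + 1) / (n_perm + 1)
```

In this sketch `a` would hold the FP scores and `b` the clinical severity scores of the same models. With SciPy installed, `scipy.stats.spearmanr` returns the same coefficient together with an analytic p-value.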

Conclusion

This study presents an innovative and accessible AI-based method for quantifying cranial shape that avoids the need to adjust for age-specific size variations or for differences in the spatial orientation of the 3D images, while ensuring complete patient privacy. The proposed FP score correlates strongly with clinical severity scores and has the potential to aid clinical decision-making and facilitate multi-center collaborations. Future work will focus on validating the model with larger patient datasets and exploring the potential of the FP score for broader applications. The source code is publicly available to facilitate implementation, with the aim of advancing craniofacial care and research.
