Method to assess the trustworthiness of machine coding at scale

IF 2.6

Zone 2, Education

Q1 EDUCATION & EDUCATIONAL RESEARCH

Physical Review Physics Education Research

Pub Date: 2024-03-06

DOI: 10.1103/physrevphyseducres.20.010113

Citations: 0

Abstract

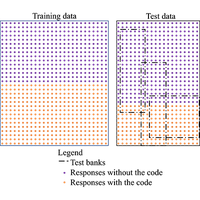

Physics education researchers are interested in using the tools of machine learning and natural language processing to make quantitative claims from natural language and text data, such as open-ended responses to survey questions. The aspiration is that this form of machine coding may be more efficient and consistent than human coding, allowing much larger and broader datasets to be analyzed than is practical with human coders. Existing work that uses these tools, however, does not investigate norms that allow for trustworthy quantitative claims without full reliance on cross-checking with human coding, which defeats the purpose of using these automated tools. Here we propose a four-part method for making such claims with supervised natural language processing: evaluating a trained model, calculating statistical uncertainty, calculating systematic uncertainty from the trained algorithm, and calculating systematic uncertainty from novel data sources. We provide evidence for this method using data from two distinct short-response survey questions with two distinct coding schemes. We also provide a real-world example of using these practices to machine code a dataset unseen by human coders. We offer recommendations to guide physics education researchers who may use machine-coding methods in the future.
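The abstract names the four parts only at a high level. As an illustration, and not as the authors' procedure, the following minimal sketch shows how three of them might be computed for a single binary code. It assumes human-machine agreement is summarized with Cohen's kappa (one common evaluation metric; the paper may use others), the statistical uncertainty on a machine-coded proportion is taken as a binomial standard error, and the systematic uncertainty from the trained algorithm is taken as the spread of that proportion across models retrained with different random seeds or training splits. All function names and the toy data are hypothetical, and the quadrature sum assumes the two uncertainties are independent.

```python
import math
import statistics


def cohens_kappa(human, machine):
    """Chance-corrected agreement between two binary codings (sequences of 0/1)."""
    if len(human) != len(machine) or not human:
        raise ValueError("codings must be non-empty and of equal length")
    n = len(human)
    observed = sum(h == m for h, m in zip(human, machine)) / n
    p_h = sum(human) / n    # fraction the human coded 1
    p_m = sum(machine) / n  # fraction the machine coded 1
    expected = p_h * p_m + (1 - p_h) * (1 - p_m)  # agreement expected by chance
    if expected == 1:
        return 1.0  # both coders used one identical label throughout
    return (observed - expected) / (1 - expected)


def proportion_with_stat_error(codes):
    """Fraction of responses coded 1 and its binomial standard error."""
    n = len(codes)
    p = sum(codes) / n
    return p, math.sqrt(p * (1 - p) / n)


def algorithmic_systematic_error(proportions):
    """Sample standard deviation of the estimated proportion across models
    retrained with, e.g., different random seeds or training splits
    (an assumed definition, for illustration only)."""
    return statistics.stdev(proportions)


def total_error(stat, syst):
    """Quadrature sum; assumes the two uncertainties are independent."""
    return math.hypot(stat, syst)


if __name__ == "__main__":
    # Toy, made-up codes for eight responses (1 = code present).
    human = [1, 1, 0, 0, 1, 0, 1, 0]
    machine = [1, 1, 0, 0, 1, 0, 0, 0]
    print("kappa:", cohens_kappa(human, machine))

    p, stat = proportion_with_stat_error(machine)
    # Hypothetical proportions from three retrained models on the same data.
    syst = algorithmic_systematic_error([0.30, 0.32, 0.34])
    print(f"proportion = {p:.3f} +/- {stat:.3f} (stat) +/- {syst:.3f} (syst)")
    print(f"total uncertainty = {total_error(stat, syst):.3f}")
```

The fourth part, systematic uncertainty from novel data sources, is not sketched here, since it depends on how a new dataset differs from the data the model was trained on.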


Source journal

Physical Review Physics Education Research

Social Sciences-Education

CiteScore: 5.70

Self-citation rate: 41.90%

Articles published: 84

Review time: 32 weeks

Journal description:

PRPER covers all educational levels, from elementary through graduate education. All topics in experimental and theoretical physics education research are accepted, including, but not limited to:

Educational policy

Instructional strategies and materials development

Research methodology

Epistemology, attitudes, and beliefs

Learning environment

Scientific reasoning and problem solving

Diversity and inclusion

Learning theory

Student participation

Faculty and teacher professional development
