Constructing and meta-evaluating state-aware evaluation metrics for interactive search systems

IF 1.9

CAS Zone 3, Computer Science

Q3 COMPUTER SCIENCE, INFORMATION SYSTEMS

Citations: 0

Abstract

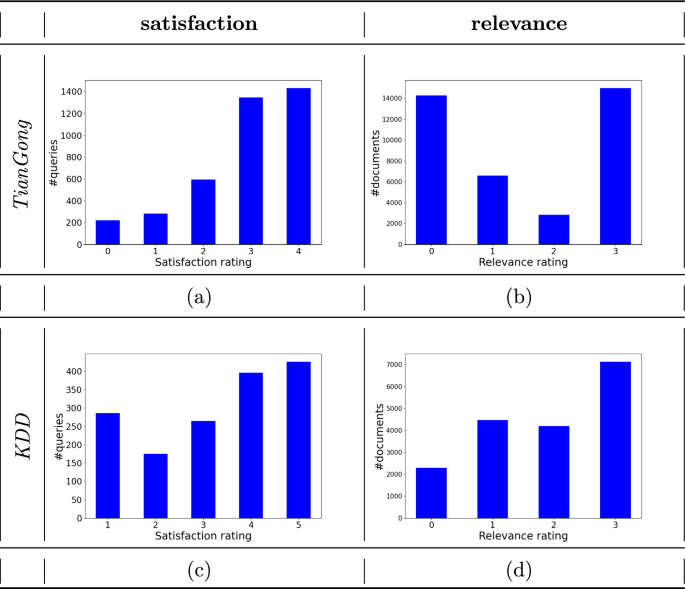

Evaluation metrics such as precision, recall and normalized discounted cumulative gain (nDCG) have been widely applied in ad hoc retrieval experiments, where they have facilitated the assessment of system performance across various topics over the past decade. However, these metrics are limited in capturing users' in-situ search experience, especially in complex search tasks that trigger interactive search sessions. To address this challenge, the evaluation strategies of search systems need to be adjusted adaptively so that they better respond to users' changing information needs and evaluation criteria. In this work, we adopt a taxonomy of the search task states that a user goes through in different scenarios and at different moments of a search session, and perform a meta-evaluation of existing metrics to better understand their effectiveness in measuring user satisfaction. We then build models that predict the task state behind each query from in-session signals. Furthermore, we construct and meta-evaluate new state-aware evaluation metrics. Our analysis and experiments are performed on two datasets, collected from a field study and a laboratory study, respectively. Results demonstrate that the effectiveness of individual evaluation metrics varies across task states, and that task states can be detected from in-session signals. In certain states, our new state-aware evaluation metrics could better reflect in-situ user satisfaction than the extensive list of widely used measures analyzed in this work. Our findings can inspire the design and meta-evaluation of user-centered adaptive evaluation metrics, and also shed light on the development of state-aware interactive search systems.
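The abstract names precision, recall and nDCG and describes meta-evaluating them separately for each task state. The Python below is a minimal sketch of that idea under stated assumptions, not the authors' implementation: each query is assumed to come with a list of graded relevance judgments in ranked order and a numeric satisfaction rating, and Pearson correlation is assumed as the meta-evaluation statistic (one common choice; the paper's exact protocol is not given here). The state labels and the helper `pick_metric_per_state` are hypothetical; choosing the best-correlated metric per state is only a naive illustration of "state-aware" evaluation, not the metrics constructed in the paper.

```python
import math
from collections import defaultdict


def precision_at_k(rels, k):
    """Fraction of the top-k results judged relevant (graded rel > 0)."""
    return sum(1 for r in rels[:k] if r > 0) / k


def recall_at_k(rels, k, total_relevant):
    """Fraction of all relevant documents that appear in the top k."""
    if total_relevant == 0:
        return 0.0
    return sum(1 for r in rels[:k] if r > 0) / total_relevant


def dcg_at_k(rels, k):
    """DCG using the common (2^rel - 1) / log2(rank + 1) gain/discount form."""
    return sum((2 ** r - 1) / math.log2(i + 2) for i, r in enumerate(rels[:k]))


def ndcg_at_k(rels, k):
    """DCG normalized by the ideal ordering of the same judgments.

    Simplification: the ideal list is built only from the judged results
    in `rels`, not from the full relevance pool.
    """
    ideal = dcg_at_k(sorted(rels, reverse=True), k)
    return dcg_at_k(rels, k) / ideal if ideal > 0 else 0.0


def pearson(xs, ys):
    """Pearson correlation; NaN if either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


def meta_evaluate_by_state(queries, metric):
    """Correlate a metric with satisfaction separately within each task state.

    queries: iterable of (state, rels, satisfaction) tuples (assumed format).
    """
    by_state = defaultdict(lambda: ([], []))
    for state, rels, sat in queries:
        scores, sats = by_state[state]
        scores.append(metric(rels))
        sats.append(sat)
    return {s: pearson(x, y) for s, (x, y) in by_state.items()}


def pick_metric_per_state(queries, metrics):
    """Hypothetical: for each state, keep the metric best correlated with satisfaction."""
    corr = {name: meta_evaluate_by_state(queries, fn) for name, fn in metrics.items()}
    states = {s for table in corr.values() for s in table}
    best = {}
    for s in states:
        scored = [(corr[n][s], n) for n in metrics if not math.isnan(corr[n][s])]
        best[s] = max(scored)[1] if scored else None
    return best
```

In use, the per-state correlations returned by `meta_evaluate_by_state` show how a metric's agreement with satisfaction can differ from one state to another, which is the kind of variation the abstract reports. A real study would typically also report significance and use more robust statistics than a single Pearson coefficient.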


Source journal

Information Retrieval Journal

Engineering Technology - Computer Science: Information Systems

CiteScore

6.20

Self-citation rate

0.00%

Articles published

17

Review time

13.5 months

Journal description:

The journal provides an international forum for the publication of theory, algorithms, analysis and experiments across the broad area of information retrieval. Topics of interest include search, indexing, analysis, and evaluation for applications such as the web, social and streaming media, recommender systems, and text archives. This includes research on human factors in search, bridging artificial intelligence and information retrieval, and domain-specific search applications.
