Robot Theory of Mind with Reverse Psychology

IF 5.5

Q2 ROBOTICS

Citations: 1

Abstract

Theory of mind (ToM) is the human ability to infer other people's desires, beliefs, and intentions. Acquiring ToM skills is crucial for natural interaction between robots and humans. A core component of ToM is the ability to attribute false beliefs. In this paper, a collaborative robot assists a human partner who plays a trust-based card game against another human. The robot infers its partner's trust in the robot's decision system via reinforcement learning. Robot ToM here refers to the ability to implicitly anticipate the human collaborator's strategy and inject that prediction into the robot's optimal decision model for better team performance. In our experiments, the robot learns when its human partner does not trust it and consequently gives recommendations under its optimal policy that preserve team performance. The interesting finding is that, when trust is low, the optimal robot policy attempts to use reverse psychology on its human collaborator. This finding offers guidance for the study of trustworthy robot decision models with a human partner in the loop.


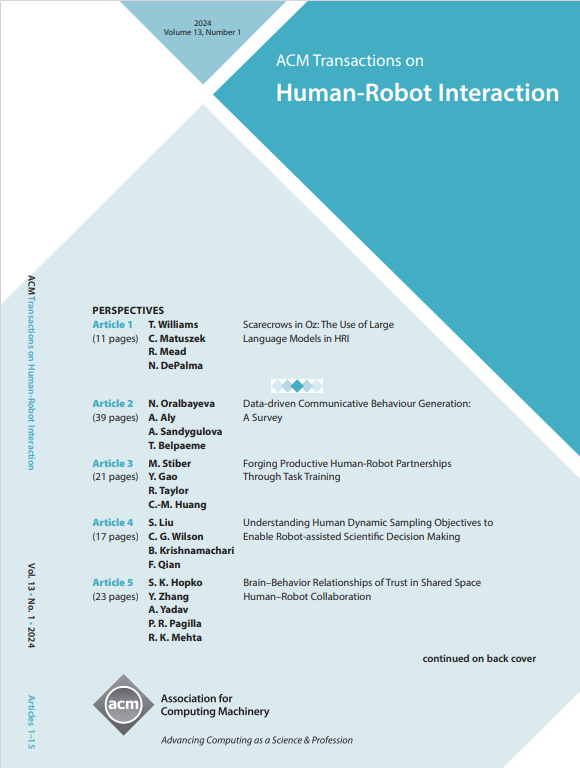

Source journal

ACM Transactions on Human-Robot Interaction

Computer Science-Artificial Intelligence

CiteScore

7.70

Self-citation rate

5.90%

Articles published

65

About the journal:

ACM Transactions on Human-Robot Interaction (THRI) is a prestigious Gold Open Access journal that aspires to lead the field of human-robot interaction as a top-tier, peer-reviewed, interdisciplinary publication. The journal prioritizes articles that significantly contribute to the current state of the art, enhance overall knowledge, have a broad appeal, and are accessible to a diverse audience. Submissions are expected to meet a high scholarly standard, and authors are encouraged to ensure their research is well-presented, advancing the understanding of human-robot interaction, adding cutting-edge or general insights to the field, or challenging current perspectives in this research domain.

THRI warmly invites well-crafted paper submissions from a variety of disciplines, encompassing robotics, computer science, engineering, design, and the behavioral and social sciences. The scholarly articles published in THRI may cover a range of topics such as the nature of human interactions with robots and robotic technologies, methods to enhance or enable novel forms of interaction, and the societal or organizational impacts of these interactions. The editorial team is also keen on receiving proposals for special issues that focus on specific technical challenges or that apply human-robot interaction research to further areas like social computing, consumer behavior, health, and education.
