Personality trait estimation in group discussions using multimodal analysis and speaker embedding

Impact factor: 2.1

Zone 3, Computer Science

Q3 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

本文章由计算机程序翻译,如有差异,请以英文原文为准。

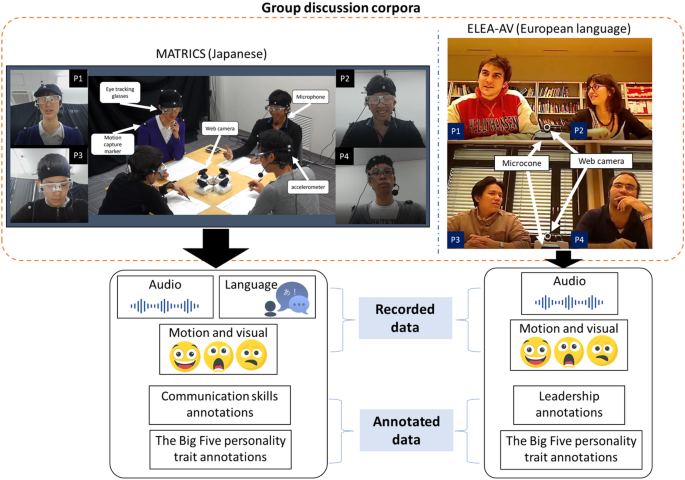

Personality trait estimation in group discussions using multimodal analysis and speaker embedding


Source journal

Journal on Multimodal User Interfaces

COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE-COMPUTER SCIENCE, CYBERNETICS

CiteScore

6.90

Self-citation rate

3.40%

Articles published

12

Review time

>12 weeks

About the journal:

The Journal on Multimodal User Interfaces publishes work in the design, implementation and evaluation of multimodal interfaces. Research on multimodal interaction is by its very essence multidisciplinary, involving several fields including signal processing, human-machine interaction, computer science, cognitive science and ergonomics. This journal focuses on multimodal interfaces involving advanced modalities, several modalities and their fusion, user-centric design, usability and architectural considerations. Use cases and descriptions of specific application areas are welcome, including, for example, e-learning, assistance, serious games, affective and social computing, and interaction with avatars and robots.
