Dual-scale adaptive attention-based Vision transformer with iterative refinement for clarity and consistency in multi-focus image fusion

IF 8

Zone 2, Computer Science

Q1 AUTOMATION & CONTROL SYSTEMS

Engineering Applications of Artificial Intelligence

Pub Date : 2025-10-22

DOI:10.1016/j.engappai.2025.112777

Citations: 0

Abstract

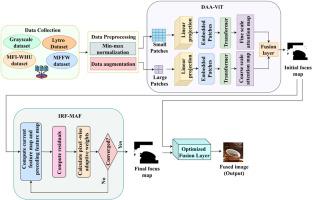


Dual-scale adaptive attention-based Vision transformer with iterative refinement for clarity and consistency in multi-focus image fusion

Multi-focus image fusion (MFIF) plays a prominent role in combining the focused regions of several source images into a single all-in-focus image. However, existing approaches struggle to maintain both global spatial coherence and sharp details. To overcome these limitations, the Dual-Scale Adaptive Attention-Based Vision Transformer (DAA-ViT) model is proposed, which integrates fine-scale and coarse-scale attention to preserve local high-resolution information together with structural coherence. Additionally, Iterative Refinement Fusion (IRF) is introduced to refine focus boundaries over multiple iterations, enhancing overall image definition while mitigating fusion artifacts and focus selection errors. In particular, this Artificial Intelligence (AI)-based approach is efficient in complex scenes with inconsistent depth levels, which makes it suitable for applications such as remote sensing and medical image processing. Experimental results on several benchmark datasets demonstrate that the proposed method outperforms existing methods, attaining a Mutual Information (MI) of 8.9671, a Structural Similarity Index Measure (SSIM) of 0.9211, a Peak Signal-to-Noise Ratio (PSNR) of 36.728 dB, and a lower Root Mean Square Error (RMSE) of 1.5482. Compared with the existing Swin Transformer and Convolutional Neural Network (STCU-Net) model, the proposed model attains a 2.65% improvement in PSNR, a 1.99% improvement in MI, a 1.11% improvement in SSIM, and a 5.13% reduction in RMSE. These findings demonstrate the efficiency of AI-based fusion strategies in delivering high-quality all-in-focus images and emphasize their applicability to medical imaging and remote sensing.
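For readers unfamiliar with the reported metrics, the following is a minimal sketch of how RMSE, PSNR, and MI are commonly computed for 8-bit grayscale images. It is not the authors' evaluation code: the function names, the peak value of 255, the base-2 logarithm, and the tiny example images are all assumptions made for illustration. Fusion papers often report MI as a sum over the source images, and the abstract does not say which convention is used here.

```python
import math
from collections import Counter


def rmse(reference, fused):
    """Root mean square error between two equally sized grayscale images."""
    squared = [(r - f) ** 2
               for row_r, row_f in zip(reference, fused)
               for r, f in zip(row_r, row_f)]
    return math.sqrt(sum(squared) / len(squared))


def psnr(reference, fused, peak=255.0):
    """Peak signal-to-noise ratio in dB (assumes an 8-bit peak of 255)."""
    err = rmse(reference, fused)
    return math.inf if err == 0 else 20.0 * math.log10(peak / err)


def mutual_information(image_a, image_b):
    """Mutual information in bits, estimated from the joint pixel histogram."""
    pairs = [(a, b)
             for row_a, row_b in zip(image_a, image_b)
             for a, b in zip(row_a, row_b)]
    n = len(pairs)
    joint = Counter(pairs)
    marginal_a = Counter(a for a, _ in pairs)
    marginal_b = Counter(b for _, b in pairs)
    # p(a,b) * log2(p(a,b) / (p(a) * p(b))), written with raw counts.
    return sum((count / n) * math.log2(count * n / (marginal_a[a] * marginal_b[b]))
               for (a, b), count in joint.items())


# Hypothetical 2x2 images, used only to exercise the functions.
reference = [[10, 20], [30, 40]]
fused = [[12, 20], [30, 38]]

print(rmse(reference, fused))
print(psnr(reference, fused))
print(mutual_information(reference, fused))
```

Lower RMSE and higher PSNR, SSIM, and MI indicate a fused image closer to the reference or more informative about its sources, which is the sense in which the abstract reports a reduction in RMSE alongside improvements in the other three.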


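Source journal

Engineering Applications of Artificial Intelligence

Engineering & Technology - Engineering: Electrical & Electronic

CiteScore

9.60

Self-citation rate

10.00%

Articles published

505

Review time

68 days

About the journal: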

Artificial Intelligence (AI) is pivotal in driving the fourth industrial revolution, witnessing remarkable advancements across various machine learning methodologies. AI techniques have become indispensable tools for practicing engineers, enabling them to tackle previously insurmountable challenges. Engineering Applications of Artificial Intelligence serves as a global platform for the swift dissemination of research elucidating the practical application of AI methods across all engineering disciplines. Submitted papers are expected to present novel aspects of AI utilized in real-world engineering applications, validated using publicly available datasets to ensure the replicability of research outcomes. Join us in exploring the transformative potential of AI in engineering.
