CrossModal-CLIP: A novel multimodal contrastive learning framework for robust network traffic anomaly detection

IF 4.6

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, HARDWARE & ARCHITECTURE

Citations: 0

Abstract

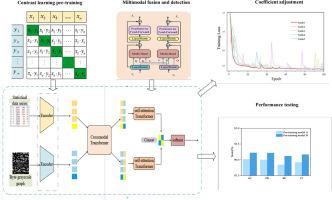

互联网连接设备的快速扩散扩大了在线活动,但也加剧了网络威胁的复杂性。传统的基于统计和原始字节分析的方法往往不能充分捕捉网络流量的全面行为,导致潜在的信息丢失。本文提出了一种基于跨模态对比学习的网络异常检测新方法。通过有效地融合交通数据的中间“多模态”表示——字节灰度图像和统计序列——通过对比学习,我们的方法增强了交通表示的鲁棒性。使用跨模态变压器编码器进行融合增强了这种表现,解决了传统方法的局限性。在对比学习中,设计了一个动态增加的温度系数来调整预训练模型。此外,利用自监督对比学习减少了对标记样本的依赖,同时增强了特征提取能力。在多个真实数据集上的大量实验验证了我们的方法的有效性,与现有方法相比,我们的方法在召回率和精度方面有了显着提高。此外,通过跨场景对比学习的不变性机制,我们还将预训练模型应用于加密环境中,探索其泛化性能。本文章由计算机程序翻译,如有差异,请以英文原文为准。

CrossModal-CLIP: A novel multimodal contrastive learning framework for robust network traffic anomaly detection

The rapid proliferation of Internet-connected devices has increased online activity but has also made network threats more complex. Traditional methods that rely on statistical features or raw-byte analysis often fail to capture the full behavior of network traffic, which can lead to information loss. This article proposes a novel method for network anomaly detection based on cross-modal contrastive learning. The method fuses two intermediate “multimodal” representations of traffic data (byte grayscale images and statistical sequences) via contrastive learning, which makes the traffic representation more robust. A cross-modal Transformer encoder performs the fusion and further strengthens this representation, addressing the limitations of traditional methods. During contrastive learning, a dynamically increasing temperature coefficient is used to adjust the pre-training model. In addition, self-supervised contrastive learning reduces reliance on labeled samples while improving feature extraction. Extensive experiments on multiple real-world datasets validate the method, showing significant improvements in recall and precision over existing approaches. Finally, relying on the invariance of contrastive learning across scenarios, the pre-trained model is also applied to an encrypted environment to explore its generalization performance.
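To make the contrastive objective concrete, the following is a minimal sketch of a CLIP-style symmetric loss between the two traffic views, with a temperature that increases over training. The abstract does not give the loss or the schedule, so everything here is an assumption for illustration: the linear schedule, the `t_start` and `t_end` values, and the function names `temperature` and `cross_modal_loss` are hypothetical, and plain Python lists stand in for the encoder outputs.

```python
import math


def temperature(step, total_steps, t_start=0.07, t_end=0.2):
    """Assumed schedule: increase the temperature linearly from t_start to t_end."""
    frac = min(max(step / max(total_steps, 1), 0.0), 1.0)
    return t_start + frac * (t_end - t_start)


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def cross_modal_loss(image_emb, stat_emb, tau):
    """Symmetric InfoNCE loss: pair i of one view should match pair i of the other."""
    img = [l2_normalize(v) for v in image_emb]
    seq = [l2_normalize(v) for v in stat_emb]
    # Cosine similarity between every image embedding and every sequence embedding.
    logits = [[sum(a * b for a, b in zip(u, w)) / tau for w in seq] for u in img]
    logits_t = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(logits_t))


if __name__ == "__main__":
    # Toy embeddings for two flows: grayscale-image view and statistical-sequence view.
    image_view = [[1.0, 0.0], [0.0, 1.0]]
    stat_view = [[0.9, 0.1], [0.2, 0.8]]
    for step in (0, 50, 100):
        tau = temperature(step, total_steps=100)
        print(step, round(tau, 3), cross_modal_loss(image_view, stat_view, tau))
```

A low temperature sharpens the softmax over similarities, so the loss concentrates on the hardest negatives; raising it during training softens that effect. Whether the paper uses a linear schedule or these bounds is not stated in the abstract.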

Source journal

Computer Networks

Engineering & Technology - Telecommunications

CiteScore

10.80

Self-citation rate

3.60%

Articles published

434

Review time

8.6 months

Journal description:

Computer Networks is an international, archival journal providing a publication vehicle for complete coverage of all topics of interest to those involved in the computer communications networking area. The audience includes researchers, managers and operators of networks as well as designers and implementors. The Editorial Board will consider any material for publication that is of interest to those groups.
