MPCM-RRG: Multi-modal Prompt Collaboration Mechanism for Radiology Report Generation

Impact factor: 4.5

Zone 2, Medicine

Q2: Computer Science, Interdisciplinary Applications

Citations: 0

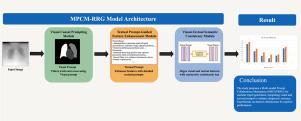

MPCM-RRG: Multi-modal Prompt Collaboration Mechanism for Radiology Report Generation

Medical report generation automatically produces descriptive text reports from medical images, with the aim of reducing physicians' workload and improving diagnostic efficiency. Many existing Transformer-based report generation models consider structural information in medical images but ignore how confounding factors interfere with these structures, which limits their ability to capture rich and critical lesion information. These models also struggle with the significant imbalance between normal and abnormal content in real reports, which makes it difficult to describe abnormalities accurately. To address these limitations, we propose the Multi-modal Prompt Collaboration Mechanism for Radiology Report Generation (MPCM-RRG) model. The model consists of three key components: the Visual Causal Prompting (VCP) module, the Textual Prompt-Guided Feature Enhancement (TPGF) module, and the Visual–Textual Semantic Consistency (VTSC) module. The VCP module uses chest X-ray masks as visual prompts and applies causal inference principles to minimize the influence of irrelevant regions; through causal intervention, the model learns the causal relationships between pathological regions in the image and the corresponding findings described in the report. The TPGF module tackles the imbalance between abnormal and normal text by integrating detailed textual prompts, which also guide the model to focus on lesion areas through a multi-head attention mechanism. The VTSC module aligns the visual and textual representations with a contrastive consistency loss, fostering greater interaction and collaboration between the visual and textual prompts. Experimental results show that MPCM-RRG outperforms other methods on the IU X-ray and MIMIC-CXR datasets, highlighting its effectiveness in generating high-quality medical reports.
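The abstract does not state the exact form of the contrastive consistency loss used in the VTSC module. As a rough illustration only, the sketch below assumes a symmetric InfoNCE-style objective over a batch of paired visual and textual embeddings, which is one common way to align two modalities. The function names, the `temperature` value, and the toy embeddings are all hypothetical and are not taken from the paper.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def log_softmax_at(logits, idx):
    """Numerically stable log-softmax of `logits`, evaluated at position `idx`."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return logits[idx] - log_sum


def contrastive_consistency_loss(visual, textual, temperature=0.07):
    """Symmetric InfoNCE loss (an assumed stand-in, not the paper's definition).

    visual[i] and textual[i] are treated as a matching pair; every other
    combination in the batch serves as a negative.
    """
    if not visual or len(visual) != len(textual):
        raise ValueError("visual and textual must be non-empty and equally long")

    v = [l2_normalize(x) for x in visual]
    t = [l2_normalize(x) for x in textual]
    n = len(v)

    # Cosine similarity matrix scaled by the temperature.
    sim = [
        [sum(a * b for a, b in zip(v[i], t[j])) / temperature for j in range(n)]
        for i in range(n)
    ]

    # Visual-to-text direction: each row should peak on its diagonal entry.
    v2t = -sum(log_softmax_at(sim[i], i) for i in range(n)) / n
    # Text-to-visual direction: each column should peak on its diagonal entry.
    t2v = -sum(
        log_softmax_at([sim[i][j] for i in range(n)], j) for j in range(n)
    ) / n

    return 0.5 * (v2t + t2v)


# Toy check: correctly paired embeddings give a far lower loss than swapped ones.
aligned = contrastive_consistency_loss([[1.0, 0.0], [0.0, 1.0]],
                                       [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_consistency_loss([[1.0, 0.0], [0.0, 1.0]],
                                       [[0.0, 1.0], [1.0, 0.0]])
```

Under this assumption, minimizing the loss pulls each image representation toward the representation of its own report and away from the other reports in the batch. Whether the paper uses this formulation or a different one cannot be determined from the abstract.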

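Source journal

Journal of Biomedical Informatics

Category: Medicine - Computer Science: Interdisciplinary Applications

CiteScore: 8.90

Self-citation rate: 6.70%

Articles published: 243

Review time: 32 days

About the journal: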

The Journal of Biomedical Informatics reflects a commitment to high-quality original research papers, reviews, and commentaries in the area of biomedical informatics methodology. Although we publish articles motivated by applications in the biomedical sciences (for example, clinical medicine, health care, population health, and translational bioinformatics), the journal emphasizes reports of new methodologies and techniques that have general applicability and that form the basis for the evolving science of biomedical informatics. Articles on medical devices; evaluations of implemented systems (including clinical trials of information technologies); or papers that provide insight into a biological process, a specific disease, or treatment options would generally be more suitable for publication in other venues. Papers on applications of signal processing and image analysis are often more suitable for biomedical engineering journals or other informatics journals, although we do publish papers that emphasize the information management and knowledge representation/modeling issues that arise in the storage and use of biological signals and images. System descriptions are welcome if they illustrate and substantiate the underlying methodology that is the principal focus of the report and an effort is made to address the generalizability and/or range of application of that methodology. Note also that, given the international nature of JBI, papers that deal with specific languages other than English, or with country-specific health systems or approaches, are acceptable for JBI only if they offer generalizable lessons that are relevant to the broad JBI readership, regardless of their country, language, culture, or health system.
