Opening the AI black-box: Symbolic regression with Kolmogorov–Arnold Networks for advanced energy applications

Impact factor: 9.6

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

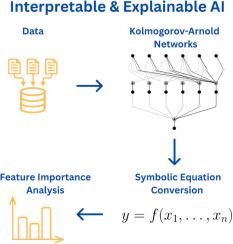



While most modern machine learning methods offer speed and accuracy, few promise interpretability or explainability, two key features necessary for highly sensitive industries such as medicine, finance, and engineering. Using eight datasets representative of one especially sensitive industry, nuclear power, this work compares a traditional feedforward neural network (FNN) to a Kolmogorov–Arnold Network (KAN). We consider not only model performance and accuracy but also interpretability, through model architecture, and explainability, through a post-hoc SHapley Additive exPlanations (SHAP) analysis, a game-theory-based feature importance method. In terms of accuracy, we find KANs and FNNs comparable across all datasets when output dimensionality is limited. KANs, which can be converted into symbolic equations after training, yield perfectly interpretable models, while FNNs remain black boxes. Finally, using the post-hoc explainability results from Kernel SHAP, we find that KANs learn real, physical relations from experimental data, while FNNs simply produce statistically accurate results. Overall, this analysis finds KANs a promising alternative to traditional machine learning methods, particularly in applications requiring both accuracy and comprehensibility.
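To make the SHAP side of the comparison concrete, the sketch below computes exact Shapley values for a tiny model by enumerating every feature coalition. It is an illustration, not the paper's pipeline: it assumes absent features are filled in from a single baseline vector, whereas the `shap` library's Kernel SHAP averages over a background dataset and estimates the same quantities with a weighted linear regression over sampled coalitions rather than full enumeration. The function name `shapley_values` and the example model are hypothetical.

```python
"""Exact Shapley values for a small model, using one baseline point.

A minimal sketch, assuming absent features take their values from a single
baseline vector. Kernel SHAP approximates these quantities by sampling
coalitions instead of enumerating all of them.
"""
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Return one Shapley value per feature of ``x``.

    The coalition value v(S) is f evaluated at a point that takes the
    features in S from ``x`` and every other feature from ``baseline``.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Hybrid input: present features from x, absent ones from baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0..n-1 among the other features
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def model(z: Sequence[float]) -> float:
    # Hypothetical closed-form model, standing in for the kind of symbolic
    # expression a trained KAN can be converted into; not from the paper.
    return 3.0 * z[0] + z[0] * z[1]


if __name__ == "__main__":
    x, base = [2.0, 3.0], [0.0, 0.0]
    phi = shapley_values(model, x, base)
    print(phi)  # [9.0, 3.0]
    # Efficiency property: contributions sum to f(x) - f(baseline).
    print(sum(phi), model(x) - model(base))  # 12.0 12.0
```

Enumeration needs on the order of 2^n model evaluations per feature, which is why sampling-based estimators such as Kernel SHAP are used once the number of input features grows.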


Source journal

Energy and AI

Engineering-Engineering (miscellaneous)

CiteScore

16.50

Self-citation rate

0.00%

Articles published

64

Review time

56 days
