Collaborative surgical instrument segmentation for monocular depth estimation in minimally invasive surgery

IF 11.8

Tier 1: Medicine

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract


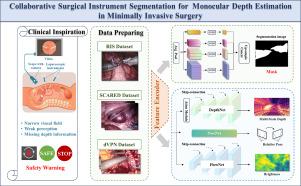

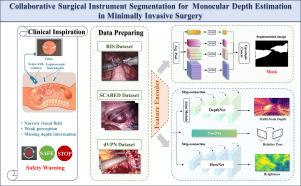


Depth estimation is essential for image-guided surgical procedures, particularly in minimally invasive environments where accurate 3D perception is critical. This paper proposes a two-stage self-supervised monocular depth estimation framework that incorporates instrument segmentation as a task-level prior to enhance spatial understanding. In the first stage, the segmentation and depth estimation models are trained separately, on the RIS and SCARED datasets respectively, to capture task-specific representations. In the second stage, segmentation masks predicted on the dVPN dataset are fused with the RGB inputs to guide the refinement of the depth prediction.

The framework employs a shared encoder and multiple decoders to enable efficient feature sharing across tasks. Comprehensive experiments on the RIS, SCARED, dVPN, and SERV-CT datasets validate the effectiveness and generalizability of the proposed approach. The results demonstrate that segmentation-aware depth estimation improves geometric reasoning in challenging surgical scenes, including those with occlusions and specular regions.
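The abstract does not say how the predicted masks are fused with the RGB inputs in the second stage. As an illustration only, the sketch below assumes the simplest option, channel concatenation, in which the instrument mask is appended to each pixel as a fourth channel. The function name `fuse_rgb_with_mask` and the toy data are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence

Pixel = List[float]
Image = List[List[Pixel]]  # height x width x channels


def fuse_rgb_with_mask(rgb: Image, mask: Sequence[Sequence[float]]) -> Image:
    """Append a per-pixel instrument mask as a fourth channel to an RGB image.

    Assumption: fusion is plain channel concatenation. The abstract does not
    specify which fusion operator the authors actually use.
    """
    if len(rgb) != len(mask):
        raise ValueError("rgb and mask must have the same height")
    fused: Image = []
    for rgb_row, mask_row in zip(rgb, mask):
        if len(rgb_row) != len(mask_row):
            raise ValueError("rgb and mask must have the same width")
        # Each fused pixel is [R, G, B, mask].
        fused.append([list(px) + [float(m)] for px, m in zip(rgb_row, mask_row)])
    return fused


# Toy 1x2 frame: the second pixel is labelled as instrument (mask = 1).
rgb_frame = [[[0.2, 0.4, 0.6], [0.9, 0.9, 0.9]]]
instrument_mask = [[0, 1]]
fused_frame = fuse_rgb_with_mask(rgb_frame, instrument_mask)
print(fused_frame)
```

Under this assumption, a depth network would take the four-channel tensor as input in place of the three-channel RGB frame. Other fusion schemes, such as feature-level fusion inside the shared encoder, are equally compatible with the abstract.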


Source journal

Medical Image Analysis

Engineering & Technology: Engineering, Biomedical

CiteScore

22.10

Self-citation rate

6.40%

Articles published

309

Review time

6.6 months

Journal description:

Medical Image Analysis serves as a platform for sharing new research findings in the realm of medical and biological image analysis, with a focus on applications of computer vision, virtual reality, and robotics to biomedical imaging challenges. The journal prioritizes the publication of high-quality, original papers contributing to the fundamental science of processing, analyzing, and utilizing medical and biological images. It welcomes approaches utilizing biomedical image datasets across all spatial scales, from molecular/cellular imaging to tissue/organ imaging.
