Model-based reinforcement learning for ultrasound-driven autonomous microrobots

Impact factor: 23.9

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

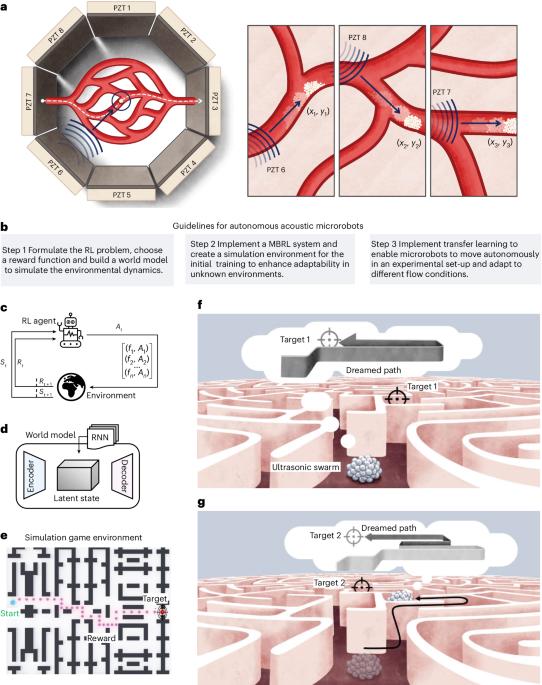

Reinforcement learning is emerging as a powerful tool for microrobot control, as it enables autonomous navigation in environments where classical control approaches fall short. However, applying reinforcement learning to microrobotics is difficult due to the need for large training datasets, the slow convergence in physical systems and poor generalizability across environments. These challenges are amplified in ultrasound-actuated microrobots, which require rapid, precise adjustments in a high-dimensional action space that are often too complex for human operators. Addressing these challenges requires sample-efficient algorithms that adapt from limited data while managing complex physical interactions. To meet these challenges, we implemented model-based reinforcement learning for autonomous control of an ultrasound-driven microrobot, which learns from recurrent imagined environments. Our non-invasive, AI-controlled microrobot offers precise propulsion and efficiently learns from images in data-scarce environments. On transitioning from a pretrained simulation environment, we achieved sample-efficient collision avoidance and channel navigation, reaching a 90% success rate in target navigation across various channels within an hour of fine-tuning. Moreover, our model initially generalized successfully in 50% of tasks in new environments, improving to over 90% with 30 min of further training. We further demonstrated real-time manipulation of microrobots in complex vasculatures under both static and flow conditions, thus underscoring the potential of AI to revolutionize microrobotics in biomedical applications.

Medany et al. present AI-driven microrobots that use ultrasound propulsion to learn how to navigate complex environments. These microrobots achieve 90% success after minimal training and adapt rapidly, showing promise for biomedical applications.

Source journal: Nature Machine Intelligence

CiteScore: 36.90

Self-citation rate: 2.10%

Articles published: 127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.