Diffusion-based low-light image enhancement with Kolmogorov-Arnold Networks (KANs)

IF 4.5

Q2 COMPUTER SCIENCE, THEORY & METHODS

Citations: 0

Abstract

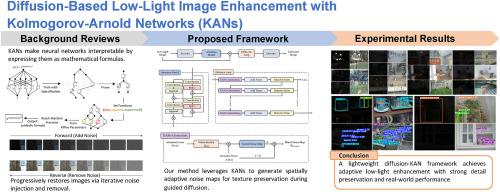

弱光图像增强是计算机视觉的一项基本任务,在自动驾驶、监视和航空成像等应用中发挥着关键作用。然而,低光图像通常会受到严重的噪声、细节丢失和对比度差的影响,从而降低视觉质量并阻碍下游任务。传统的基于稳定扩散的增强方法在去噪过程中均匀地在整个图像上施加噪声,导致纹理丰富区域不必要的细节退化。为了解决这一限制,我们提出了一种自适应噪声调制框架,将Kolmogorov-Arnold网络(KANs)集成到扩散过程中。与传统方法不同,我们的方法利用KANs来分析局部图像结构并有选择地控制噪声分布,确保在有效增强较暗区域的同时保留关键细节。该模型通过结构感知扩散机制迭代注入和去除噪声,逐步细化图像特征,实现稳定、高保真的恢复。在多个低光数据集上的大量实验表明,我们的方法在llo -v2数据集上实现了20.31 dB的PSNR和0.137 LPIPS,优于当前最先进的方法,如enlightenment gan和PairLIE。此外,我们的模型保持了很高的效率,只有0.08M参数和13.72G FLOPs,使其非常适合实际部署。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Low-light image enhancement is a fundamental task in computer vision, playing a critical role in applications such as autonomous driving, surveillance, and aerial imaging. However, low-light images often suffer from severe noise, loss of detail, and poor contrast, which degrade visual quality and hinder downstream tasks. Traditional stable diffusion-based enhancement methods apply noise uniformly across the entire image during the denoising process, leading to unnecessary detail degradation in texture-rich areas. To address this limitation, we propose an adaptive noise modulation framework that integrates Kolmogorov-Arnold Networks (KANs) into the diffusion process. Unlike conventional approaches, our method leverages KANs to analyze local image structures and selectively control noise distribution, ensuring that critical details are preserved while effectively enhancing darker regions. By iteratively injecting and removing noise through a structure-aware diffusion mechanism, our model progressively refines image features, achieving stable and high-fidelity restoration. Extensive experiments on multiple low-light datasets demonstrate that our method achieves 20.31 dB PSNR and 0.137 LPIPS on the LOL-v2 dataset, outperforming state-of-the-art methods such as EnlightenGAN and PairLIE. Moreover, our model maintains high efficiency with only 0.08M parameters and 13.72G FLOPs, making it well-suited for real-world deployment.
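The abstract does not give the formulation of the adaptive noise modulation, so the following is only a minimal sketch of the general idea: scale the injected noise per pixel so that texture-rich regions receive less of it. Everything here is an assumption made for illustration. The names `local_texture`, `noise_mask`, and `forward_step`, and the `floor` parameter, are hypothetical; a hand-written gradient-magnitude measure stands in for the learned KAN-based structure analysis; and the noising step follows the standard DDPM closed form rather than anything confirmed by the paper.

```python
import math
import random


def local_texture(img):
    """Per-pixel gradient magnitude, normalised to [0, 1].

    A hand-written stand-in for learned structure analysis (NOT a KAN).
    `img` is a list of rows of floats (a grayscale image).
    """
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences with clamped (replicated) borders.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            grad[y][x] = math.hypot(gx, gy)
    peak = max(max(row) for row in grad)
    if peak == 0.0:
        return grad  # flat image: no texture anywhere
    return [[g / peak for g in row] for row in grad]


def noise_mask(img, floor=0.2):
    """Map texture to a noise scale in [floor, 1].

    Flat pixels keep the full noise level (1.0); the most textured
    pixels are reduced to `floor` (a hypothetical tuning parameter).
    """
    tex = local_texture(img)
    return [[1.0 - (1.0 - floor) * t for t in row] for row in tex]


def forward_step(img, alpha_bar, mask, rng):
    """Noise an image in one shot with a per-pixel noise scale.

    Standard DDPM form: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps.
    Here eps is additionally multiplied by mask[y][x] (assumed modulation).
    """
    a = math.sqrt(alpha_bar)
    s = math.sqrt(1.0 - alpha_bar)
    return [
        [a * px + s * m * rng.gauss(0.0, 1.0) for px, m in zip(row, mrow)]
        for row, mrow in zip(img, mask)
    ]


if __name__ == "__main__":
    # Toy image: dark left half, bright right half, one vertical edge.
    image = [[0.1] * 3 + [0.9] * 3 for _ in range(4)]
    mask = noise_mask(image, floor=0.2)
    noisy = forward_step(image, alpha_bar=0.5, mask=mask, rng=random.Random(0))
    print([round(m, 2) for m in mask[0]])
    print([round(v, 3) for v in noisy[0]])
```

In this toy setup the mask drops to `floor` only at the two pixels on either side of the edge and stays at 1.0 in the flat regions. In a learned variant, a trained network would presumably replace `local_texture`, and the reverse (denoising) process would need to account for the same mask.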
