评估通过多策略提示从胃镜和结肠镜报告中提取信息的大型语言模型。

IF 4.5

2区 医学

Q2 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

引用次数: 0

摘要

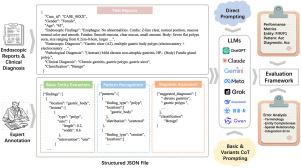

目的:通过快速工程系统评估大型语言模型(LLMs)在胃镜和结肠镜报告中自动信息提取的能力,解决其提取结构化信息、识别复杂模式和支持临床诊断推理的能力。方法:我们开发了一个包含三个层次任务的评估框架:基本实体提取、模式识别和诊断评估。该研究利用了162份内窥镜报告的数据集,并附有临床专家的结构化注释。各种语言模型,包括专有的、新兴的和开源的替代方案,在零试和少试学习范式下进行了评估。对于每个任务,实施了多种提示策略,包括直接提示和五个思维链(CoT)提示变体。结果:具有专门架构的大型模型在实体提取任务中取得了更好的性能,但在捕获空间关系和整合临床结果方面面临着显著的挑战。几次学习的有效性因模型和任务而异,较大的模型显示出更一致的改进模式。结论:这些发现为当前医学专业领域语言模型的能力和局限性提供了重要的见解,有助于开发更有效的临床文献分析系统。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Evaluating large language models for information extraction from gastroscopy and colonoscopy reports through multi-strategy prompting

Objective:

To systematically evaluate large language models (LLMs) for automated information extraction from gastroscopy and colonoscopy reports through prompt engineering, addressing their ability to extract structured information, recognize complex patterns, and support diagnostic reasoning in clinical contexts.

Methods:

We developed an evaluation framework incorporating three hierarchical tasks: basic entity extraction, pattern recognition, and diagnostic assessment. The study utilized a dataset of 162 endoscopic reports with structured annotations from clinical experts. Various language models, including proprietary, emerging, and open-source alternatives, were evaluated under both zero-shot and few-shot learning paradigms. For each task, multiple prompting strategies were implemented, including direct prompting and five Chain-of-Thought (CoT) prompting variants.

Results:

Larger models with specialized architectures achieved better performance in entity extraction tasks but faced notable challenges in capturing spatial relationships and integrating clinical findings. The effectiveness of few-shot learning varied across models and tasks, with larger models showing more consistent improvement patterns.

Conclusion:

These findings provide important insights into the current capabilities and limitations of language models in specialized medical domains, contributing to the development of more effective clinical documentation analysis systems.

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

来源期刊

Journal of Biomedical Informatics

医学-计算机:跨学科应用

CiteScore

8.90

自引率

6.70%

发文量

243

审稿时长

32 days

期刊介绍:

The Journal of Biomedical Informatics reflects a commitment to high-quality original research papers, reviews, and commentaries in the area of biomedical informatics methodology. Although we publish articles motivated by applications in the biomedical sciences (for example, clinical medicine, health care, population health, and translational bioinformatics), the journal emphasizes reports of new methodologies and techniques that have general applicability and that form the basis for the evolving science of biomedical informatics. Articles on medical devices; evaluations of implemented systems (including clinical trials of information technologies); or papers that provide insight into a biological process, a specific disease, or treatment options would generally be more suitable for publication in other venues. Papers on applications of signal processing and image analysis are often more suitable for biomedical engineering journals or other informatics journals, although we do publish papers that emphasize the information management and knowledge representation/modeling issues that arise in the storage and use of biological signals and images. System descriptions are welcome if they illustrate and substantiate the underlying methodology that is the principal focus of the report and an effort is made to address the generalizability and/or range of application of that methodology. Note also that, given the international nature of JBI, papers that deal with specific languages other than English, or with country-specific health systems or approaches, are acceptable for JBI only if they offer generalizable lessons that are relevant to the broad JBI readership, regardless of their country, language, culture, or health system.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: