
IF 4.5

Zone 2, Medicine

Q2 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 0


SigPhi-Med: A lightweight vision-language assistant for biomedicine

Background:

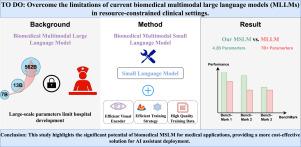

Recent advancements in general multimodal large language models (MLLMs) have led to substantial improvements in the performance of biomedical MLLMs across diverse medical tasks, exhibiting significant transformative potential. However, the large number of parameters in MLLMs necessitates substantial computational resources during both training and inference stages, thereby limiting their feasibility in resource-constrained clinical settings. This study aims to develop a lightweight biomedical multimodal small language model (MSLM) to mitigate this limitation.
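To make the resource argument concrete, the memory needed just to hold model weights can be estimated as parameter count × bytes per parameter. The sketch below assumes 16-bit weights (2 bytes per parameter) and ignores activations, KV cache, optimizer state, and framework overhead, so real requirements are higher. The parameter counts are those reported in the Results. Med-MoE is omitted because the "2.7B × 4" notation does not by itself give a single total parameter count.

```python
# Rough estimate of the memory needed only to store model weights.
# Assumption: 16-bit floats (2 bytes per parameter); activations, KV cache,
# optimizer state, and framework overhead are deliberately ignored.

BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return weight memory in gigabytes, using 1 GB = 1e9 bytes."""
    return num_params * bytes_per_param / 1e9


# Parameter counts as reported in the abstract's Results.
MODELS = {
    "SigPhi-Med": 4.2e9,
    "LLaVA-Med-v1.5": 7e9,
    "LLaVA-Med": 13e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name:>15}: ~{weight_memory_gb(params):.1f} GB of fp16 weights")
```

Under these assumptions the 4.2B-parameter model needs about 8.4 GB for its weights, compared with about 14 GB for a 7B model and 26 GB for a 13B model.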

Methods:

We replaced the large language model (LLM) in the MLLM architecture with a small language model (SLM), which substantially reduces the number of parameters. To ensure that the model still performs well on biomedical tasks, we systematically analyzed how the key components of biomedical MSLMs (the SLM, the vision encoder, the training strategy, and the training data) affect model performance. Based on these analyses, we applied targeted optimizations to the model.
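The abstract does not describe the experimental protocol behind this component analysis. One common way to organize such a study is a one-factor-at-a-time ablation: start from a baseline configuration and change exactly one component per run. The sketch below only enumerates such runs; every component name and value in it is a hypothetical placeholder, not a setting used for SigPhi-Med.

```python
from typing import Dict, Iterator, List

# Hypothetical baseline; placeholder values, not the authors' configuration.
BASELINE: Dict[str, str] = {
    "slm": "slm-small",
    "vision_encoder": "encoder-a@224",
    "training_strategy": "two-stage",
    "training_data": "dataset-v1",
}

# Hypothetical alternatives to try for each component.
ALTERNATIVES: Dict[str, List[str]] = {
    "slm": ["slm-medium", "slm-large"],
    "vision_encoder": ["encoder-a@384", "encoder-b@224"],
    "training_strategy": ["single-stage"],
    "training_data": ["dataset-v1-filtered", "dataset-v2"],
}


def one_factor_at_a_time(
    baseline: Dict[str, str], alternatives: Dict[str, List[str]]
) -> Iterator[Dict[str, str]]:
    """Yield the baseline, then variants that each change exactly one component."""
    yield dict(baseline)  # copy so callers cannot mutate the baseline
    for component, options in alternatives.items():
        for option in options:
            variant = dict(baseline)
            variant[component] = option
            yield variant


if __name__ == "__main__":
    for config in one_factor_at_a_time(BASELINE, ALTERNATIVES):
        print(config)
```

Each configuration would then be trained and scored on the same benchmarks, so any difference can be attributed to the single component that changed. A full factorial grid would also reveal interactions between components, at the cost of many more training runs.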

Results:

Experiments demonstrate that the performance of biomedical MSLMs is significantly influenced by the parameter count of the SLM component, the pre-training strategy and resolution of the vision encoder component, and both the quality and quantity of the training data. Compared with several state-of-the-art models, including LLaVA-Med-v1.5 (7B), LLaVA-Med (13B), and Med-MoE (2.7B × 4), our optimized model, SigPhi-Med, achieves significantly better overall performance on the VQA-RAD, SLAKE, and Path-VQA medical visual question-answering (VQA) benchmarks while using only 4.2B parameters.
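Input resolution also affects cost. Assuming a ViT-style vision encoder that splits a square image into non-overlapping patches, the number of patch tokens grows with the square of the side length. The helper below computes that count. The patch size of 16 is an assumption (real encoders vary), and how many of these tokens actually reach the language model depends on the projector design, which the abstract does not describe.

```python
def num_patch_tokens(resolution: int, patch_size: int) -> int:
    """Count patch tokens a ViT-style encoder produces for a square image.

    Assumes non-overlapping patches and a resolution that is an exact
    multiple of patch_size; extra tokens such as [CLS] are not counted.
    """
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    per_side = resolution // patch_size
    return per_side * per_side


if __name__ == "__main__":
    # Hypothetical patch size of 16 pixels.
    for res in (224, 384, 448):
        print(f"{res}x{res} -> {num_patch_tokens(res, 16)} patch tokens")
```

With a 16-pixel patch, going from 224 to 448 pixels per side doubles the side length but quadruples the token count (196 to 784), which is why higher resolution can help fine-grained medical images while also raising compute.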

Conclusions:

This study highlights the significant potential of biomedical MSLMs in biomedical applications and offers a more cost-effective approach to deploying AI assistants in healthcare settings. Additionally, our analysis of the key components of MSLMs provides valuable insights for developing such models in other specialized domains. Our code is available at https://github.com/NyKxo1/SigPhi-Med.


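Source journal: Journal of Biomedical Informatics

Category: Medicine / Computer Science, Interdisciplinary Applications

CiteScore: 8.90

Self-citation rate: 6.70%

Articles published: 243

Review time: 32 days

Journal description: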

The Journal of Biomedical Informatics reflects a commitment to high-quality original research papers, reviews, and commentaries in the area of biomedical informatics methodology. Although we publish articles motivated by applications in the biomedical sciences (for example, clinical medicine, health care, population health, and translational bioinformatics), the journal emphasizes reports of new methodologies and techniques that have general applicability and that form the basis for the evolving science of biomedical informatics. Articles on medical devices; evaluations of implemented systems (including clinical trials of information technologies); or papers that provide insight into a biological process, a specific disease, or treatment options would generally be more suitable for publication in other venues. Papers on applications of signal processing and image analysis are often more suitable for biomedical engineering journals or other informatics journals, although we do publish papers that emphasize the information management and knowledge representation/modeling issues that arise in the storage and use of biological signals and images. System descriptions are welcome if they illustrate and substantiate the underlying methodology that is the principal focus of the report and an effort is made to address the generalizability and/or range of application of that methodology. Note also that, given the international nature of JBI, papers that deal with specific languages other than English, or with country-specific health systems or approaches, are acceptable for JBI only if they offer generalizable lessons that are relevant to the broad JBI readership, regardless of their country, language, culture, or health system.
