Compositional pretraining improves computational efficiency and matches animal behaviour on complex tasks

IF 23.9

Tier 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

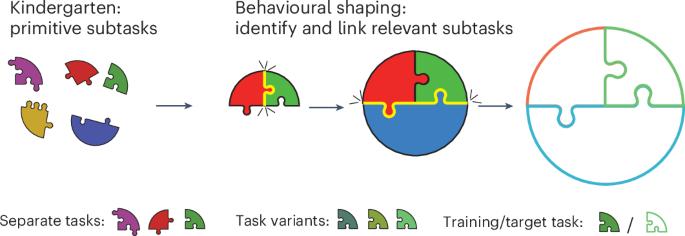


Compositional pretraining improves computational efficiency and matches animal behaviour on complex tasks

Recurrent neural networks (RNNs) are ubiquitously used in neuroscience to capture both neural dynamics and behaviours of living systems. However, when it comes to complex cognitive tasks, training RNNs with traditional methods can prove difficult and fall short of capturing crucial aspects of animal behaviour. Here we propose a principled approach for identifying and incorporating compositional tasks as part of RNN training. Taking as the target a temporal wagering task previously studied in rats, we design a pretraining curriculum of simpler cognitive tasks that reflect relevant subcomputations, which we term ‘kindergarten curriculum learning’. We show that this pretraining substantially improves learning efficacy and is critical for RNNs to adopt similar strategies as rats, including long-timescale inference of latent states, which conventional pretraining approaches fail to capture. Mechanistically, our pretraining supports the development of slow dynamical systems features needed for implementing both inference and value-based decision making. Overall, our approach helps endow RNNs with relevant inductive biases, which is important when modelling complex behaviours that rely on multiple cognitive functions.

Hocker et al. demonstrate a method for training recurrent neural networks, which they call ‘kindergarten curriculum learning’, involving pretraining on simple cognitive tasks to improve learning efficiency. This approach helps recurrent neural networks to mimic animal behaviour in solving complex tasks.


Source journal

Nature Machine Intelligence


CiteScore: 36.90

Self-citation rate: 2.10%

Articles published: 127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
