基于强化学习的图神经网络对抗性防御安全训练

IF 6.5

2区 计算机科学

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

引用次数: 0

摘要

图神经网络(gnn)的安全性对于确保在实际应用中集成的系统的可靠性和保护至关重要。然而,目前的方法缺乏阻止gnn学习高风险信息的能力,包括边、节点、卷积等。在本文中,我们提出了一个安全的GNN学习框架,称为基于强化学习的安全训练算法。我们首先介绍了一种模型转换技术,将gnn的训练过程转换为可验证的马尔可夫决策过程模型。为了保证模型的安全性,我们采用了深度Q-Learning算法来防止高风险的信息消息。此外,为了验证由Deep Q-Learning算法导出的策略是否满足安全要求,我们设计了一种模型转换算法,将mdp转换为概率验证模型,从而通过形式化验证工具确保我们方法的安全性。在对抗性攻击图下,开源数据集的平均准确率提高了6.4%,证明了该方法的有效性和可行性。本文章由计算机程序翻译,如有差异,请以英文原文为准。

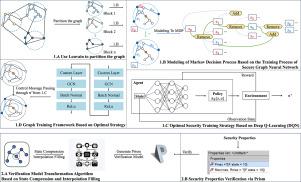

Reinforcement learning-based secure training for adversarial defense in graph neural networks

The security of Graph Neural Networks (GNNs) is crucial for ensuring the reliability and protection of the real-world systems into which they are integrated. However, current approaches cannot prevent GNNs from learning high-risk information, including edges, nodes, and convolutions. In this paper, we propose a secure GNN learning framework called the Reinforcement Learning-based Secure Training Algorithm. We first introduce a model conversion technique that transforms the training process of GNNs into a verifiable Markov Decision Process (MDP) model. To maintain the security of the model, we employ the Deep Q-Learning algorithm to block high-risk information messages. Additionally, to verify whether the policy derived from the Deep Q-Learning algorithm meets the safety requirements, we design a model transformation algorithm that converts MDPs into probabilistic verification models, so that the security of our method can be checked with formal verification tools. The effectiveness and feasibility of the proposed method are demonstrated by a 6.4% improvement in average accuracy on open-source datasets whose graphs are under adversarial attack.
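The abstract does not specify the states, actions, or rewards of the MDP, so the following is only a minimal sketch of the general idea of learning a message-filtering policy with Q-learning. It uses a tabular Q-table rather than a deep network, and everything in it is an assumption made for illustration: the four-edge toy graph, the `RISKY` labels, the keep/drop action set, and the reward function are hypothetical and are not taken from the paper.

```python
import random

# Hypothetical toy MDP (not the paper's model): the state is the index of the
# edge being inspected; action 0 drops the edge's message, action 1 keeps it.
# RISKY holds illustrative labels marking which edges are assumed adversarial.
RISKY = [False, True, False, True]
ACTIONS = (0, 1)


def reward(state, action):
    """+1 for keeping a clean edge or dropping a risky one, -1 otherwise."""
    keep = action == 1
    return 1.0 if keep != RISKY[state] else -1.0


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    n = len(RISKY)
    q = [[0.0, 0.0] for _ in range(n)]
    for _ in range(episodes):
        for s in range(n):  # one episode inspects each edge once, in order
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                a = max(ACTIONS, key=lambda x: q[s][x])  # exploit
            r = reward(s, a)
            # The episode ends after the last edge, so there is no bootstrap term there.
            future = max(q[s + 1]) if s + 1 < n else 0.0
            # Standard Q-learning update toward r + gamma * max_a' Q(s', a').
            q[s][a] += alpha * (r + gamma * future - q[s][a])
    return q


def greedy_policy(q):
    """Pick the highest-valued action in every state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(len(q))]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

In this toy setting the learned greedy policy keeps the edges labelled clean and drops the ones labelled risky. A Deep Q-Learning variant would replace the table `q` with a neural network that estimates the same action values.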


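Source journal

Neurocomputing

Engineering and Technology - Computer Science: Artificial Intelligence

CiteScore

13.10

Self-citation rate

10.00%

Articles published

1382

Review time

70 days

About the journal: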

Neurocomputing publishes articles describing recent fundamental contributions in the field of neurocomputing. Neurocomputing theory, practice and applications are the essential topics being covered.
