探索文本监督之外的可扩展医学影像编码器

IF 18.8

1区 计算机科学

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

引用次数: 0

摘要

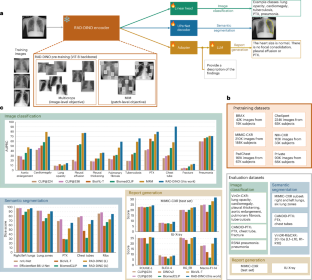

语言监督预训练已被证明是从图像中提取语义特征的一种有价值的方法,是计算机视觉和医学成像领域中多模态系统的基础元素。然而,计算的特征受到文本中包含的信息的限制,这在医学成像中尤其成问题,在医学成像中,放射科医生描述的发现集中在特定的观察上。由于担心个人健康信息泄露,配对图像-文本数据的稀缺加剧了这一挑战。在这项工作中,我们从根本上挑战了普遍依赖语言监督来学习通用生物医学成像编码器。我们介绍RAD-DINO,一种生物医学图像编码器,仅对单峰生物医学成像数据进行预训练,在各种基准测试中获得与最先进的生物医学语言监督模型相似或更高的性能。具体来说,学习表征的质量在标准成像任务(分类和语义分割)和视觉语言对齐任务(从图像生成文本报告)上进行评估。为了进一步证明语言监督的缺点,我们表明RAD-DINO的特征与其他医疗记录(例如,性别或年龄)的相关性比语言监督模型更好,而语言监督模型通常不会在放射学报告中提到。最后,我们进行了一系列的消融,确定了影响RAD-DINO性能的因素。特别是,我们观察到RAD-DINO的下游性能随训练数据的数量和多样性而良好地扩展,这表明仅图像监督是一种可扩展的训练基础生物医学图像编码器的方法。本文章由计算机程序翻译,如有差异,请以英文原文为准。

Exploring scalable medical image encoders beyond text supervision

Language-supervised pretraining has proven to be a valuable method for extracting semantically meaningful features from images, serving as a foundational element in multimodal systems within the computer vision and medical imaging domains. However, the computed features are limited by the information contained in the text, which is particularly problematic in medical imaging, in which the findings described by radiologists focus on specific observations. This challenge is compounded by the scarcity of paired imaging–text data due to concerns over the leakage of personal health information. In this work, we fundamentally challenge the prevailing reliance on language supervision for learning general-purpose biomedical imaging encoders. We introduce RAD-DINO, a biomedical image encoder pretrained solely on unimodal biomedical imaging data that obtains similar or greater performance than state-of-the-art biomedical-language-supervised models on a diverse range of benchmarks. Specifically, the quality of learned representations is evaluated on standard imaging tasks (classification and semantic segmentation), and a vision–language alignment task (text report generation from images). To further demonstrate the drawback of language supervision, we show that features from RAD-DINO correlate with other medical records (for example, sex or age) better than language-supervised models, which are generally not mentioned in radiology reports. Finally, we conduct a series of ablations determining the factors in RAD-DINO’s performance. In particular, we observe that RAD-DINO’s downstream performance scales well with the quantity and diversity of training data, demonstrating that image-only supervision is a scalable approach for training a foundational biomedical image encoder. Reliance on text supervision for biomedical image encoders is investigated. The proposed RAD-DINO, pretrained solely on unimodal data, achieves similar or greater performance than state-of-the-art multimodal models on various benchmarks.

求助全文

通过发布文献求助,成功后即可免费获取论文全文。

去求助

来源期刊

Nature Machine Intelligence

Multiple-

CiteScore

36.90

自引率

2.10%

发文量

127

期刊介绍:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: