Decentralized coordination of distributed energy resources through local energy markets and deep reinforcement learning

IF 9.6

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract


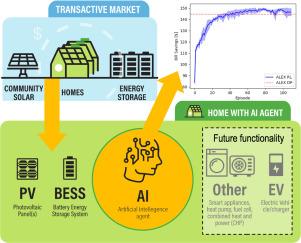

As the energy landscape evolves towards sustainability, the accelerating integration of distributed energy resources poses challenges to the operability and reliability of the electricity grid. One significant aspect of this issue is the notable increase in net load variability at the grid edge.

Transactive energy, implemented through local energy markets, has recently garnered attention as a promising way to address these grid challenges through decentralized, indirect demand response at the community level. Model-free control approaches, such as deep reinforcement learning (DRL), show promise for automating participation in this context in a decentralized way. Existing studies at the intersection of transactive energy and model-free control primarily focus on socioeconomic and self-consumption metrics. They overlook the crucial goal of reducing community-level net load variability.

This study addresses this gap by training a set of deep reinforcement learning agents to automate end-user participation in an economy-driven, autonomous local energy market (ALEX). In this setting, each agent optimizes only its own bill and shares no information with the others. The study reveals a clear correlation between bill reduction and reduced net load variability. The impact on net load variability is assessed on an open-source dataset over various time horizons. The metrics include ramping rate, daily and monthly load factor, and daily average and total peak export and import.
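The variability metrics named above can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's implementation. The helper names `variability_metrics` and `load_factor` are hypothetical. Net load is assumed to be energy per interval in kWh, positive for grid import and negative for export. Ramping rate is taken as the mean absolute change between consecutive intervals. Load factor is computed on imports only, as average divided by peak.

```python
from statistics import mean


def load_factor(block):
    """Average import divided by peak import (assumption: load factor on imports only)."""
    imports = [max(x, 0.0) for x in block]
    peak = max(imports)
    return mean(imports) / peak if peak > 0 else 0.0


def variability_metrics(net_load, steps_per_day):
    """Hypothetical helper computing common net-load variability metrics.

    Assumed convention: net_load[t] is net energy per interval in kWh,
    positive for grid import and negative for export.
    """
    if len(net_load) < 2 or len(net_load) % steps_per_day != 0:
        raise ValueError("need at least two samples covering whole days")

    # Ramping rate: mean absolute change between consecutive intervals.
    ramping_rate = mean(abs(b - a) for a, b in zip(net_load, net_load[1:]))

    # Split the series into days for daily totals and daily load factors.
    days = [net_load[i:i + steps_per_day] for i in range(0, len(net_load), steps_per_day)]

    return {
        "ramping_rate": ramping_rate,
        "mean_daily_load_factor": mean(load_factor(day) for day in days),
        # Over a one-month series, this is the monthly load factor.
        "period_load_factor": load_factor(net_load),
        "avg_daily_import": mean(sum(max(x, 0.0) for x in day) for day in days),
        "avg_daily_export": mean(sum(max(-x, 0.0) for x in day) for day in days),
        "peak_import": max(max(x, 0.0) for x in net_load),
        "peak_export": max(max(-x, 0.0) for x in net_load),
    }
```

Take a made-up two-day series with two intervals per day: `variability_metrics([1.0, 2.0, -1.0, 3.0], steps_per_day=2)` gives a ramping rate of 8/3 and a period load factor of 0.5. Lower ramping rates and higher load factors indicate a flatter, less variable net load.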

To examine the performance of the proposed DRL method, its agents are benchmarked against a near-optimal dynamic programming method, with a no-control scenario as the baseline. The dynamic programming benchmark reduces average daily import, average daily export, and peak demand by 22.05%, 83.92%, and 24.09%, respectively. The DRL agents achieve comparable or superior performance, reducing the same metrics by 21.93%, 84.46%, and 27.02%. This demonstrates that DRL can be employed effectively for such tasks: DRL agents are inherently scalable and deliver near-optimal performance in decentralized grid management.
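The benchmark comparison above is simple arithmetic on the reported figures. The sketch below assumes each percentage is a relative reduction against the no-control baseline. The names `percent_reduction` and `compare` are hypothetical choices for illustration. So is the 0.5 percentage-point tolerance used to call a result "comparable".

```python
# Reductions relative to the no-control baseline, in percent, as reported above.
DP = {"avg_daily_import": 22.05, "avg_daily_export": 83.92, "peak_demand": 24.09}
DRL = {"avg_daily_import": 21.93, "avg_daily_export": 84.46, "peak_demand": 27.02}


def percent_reduction(baseline, controlled):
    """Relative reduction of a metric versus the baseline, in percent (assumed definition)."""
    return (baseline - controlled) / baseline * 100.0


def compare(dp, drl, tolerance_pp=0.5):
    """Classify DRL against DP per metric.

    Gaps within tolerance_pp percentage points (a hypothetical threshold)
    count as comparable; a positive gap means DRL reduces the metric more.
    """
    result = {}
    for metric, dp_value in dp.items():
        gap = round(drl[metric] - dp_value, 2)
        if abs(gap) <= tolerance_pp:
            verdict = "comparable"
        elif gap > 0:
            verdict = "superior"
        else:
            verdict = "inferior"
        result[metric] = (gap, verdict)
    return result
```

With the default threshold, `compare(DP, DRL)` labels average daily import as comparable (a gap of -0.12 points). It labels average daily export (+0.54 points) and peak demand (+2.93 points) as superior. A slightly larger tolerance would move the export verdict to comparable.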


Source journal: Energy and AI

Engineering-Engineering (miscellaneous)

CiteScore: 16.50

Self-citation rate: 0.00%

Publication volume: 64

Review time: 56 days
