A data-driven framework for identifying patient subgroups on which an AI/machine learning model may underperform

IF 12.4

Zone 1, Medicine

Q1 HEALTH CARE SCIENCES & SERVICES

Citations: 0

Abstract

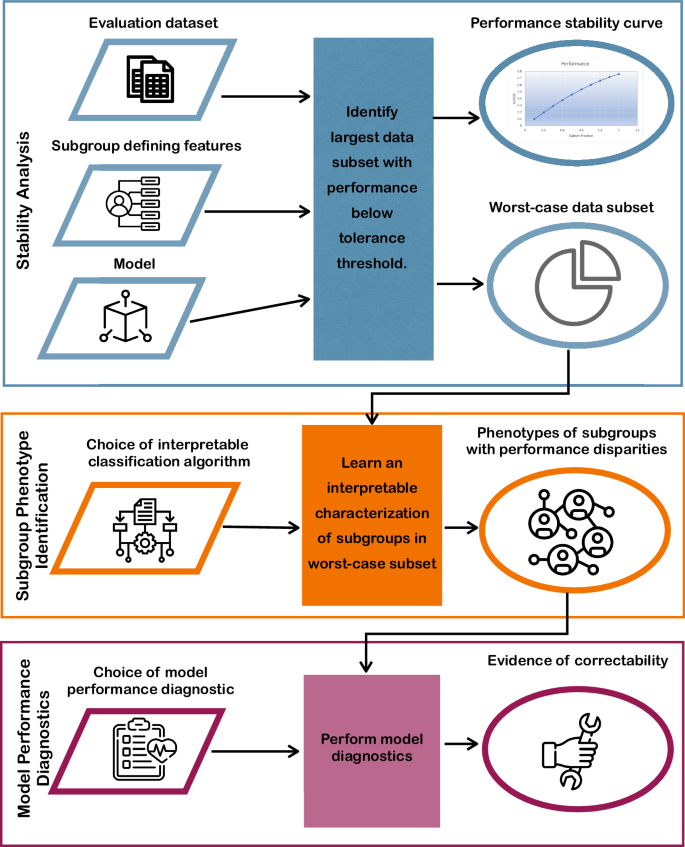

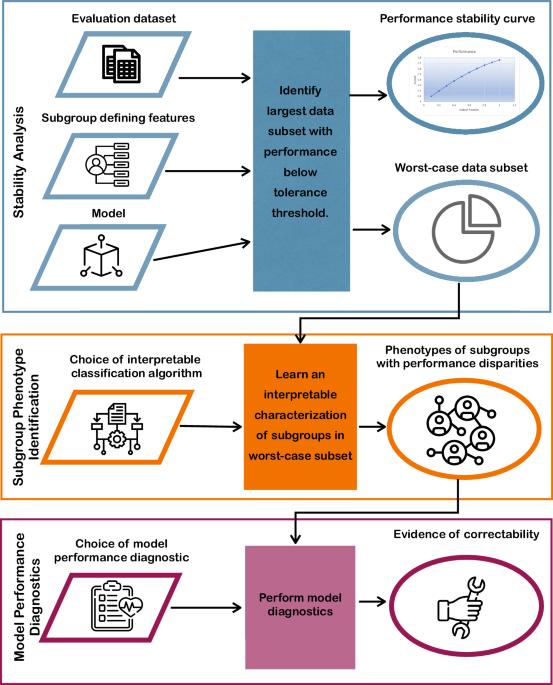

A fundamental goal of evaluating the performance of a clinical model is to ensure it performs well across a diverse intended patient population. A primary challenge is that the data used in model development and testing often consist of many overlapping, heterogeneous patient subgroups that may not be explicitly defined or labeled. While a model’s average performance on a dataset may be high, the model can have significantly lower performance for certain subgroups, which may be hard to detect. We describe an algorithmic framework for identifying subgroups with potential performance disparities (AFISP), which produces a set of interpretable phenotypes corresponding to subgroups for which the model’s performance may be relatively lower. This could allow model evaluators, including developers and users, to identify possible failure modes prior to wide-scale deployment. We illustrate the application of AFISP by applying it to a patient deterioration model to detect significant subgroup performance disparities, and show that AFISP is significantly more scalable than existing algorithmic approaches.
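The point that a high average can hide a weak subgroup can be illustrated with a small sketch. This is not AFISP itself: AFISP is described as discovering subgroups that are not explicitly labeled, whereas the code below assumes the simpler case in which a grouping column is already known. The function names, the `unit` field, the thresholds, and the toy records are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def subgroup_accuracy(records, group_key):
    """Return (overall accuracy, {group value: accuracy}) for labeled records."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        g = r[group_key]
        totals[g] += 1
        hits[g] += int(r["y_true"] == r["y_pred"])
    overall = sum(hits.values()) / sum(totals.values())
    per_group = {g: hits[g] / totals[g] for g in totals}
    return overall, per_group

def flag_underperforming(records, group_key, min_gap=0.1):
    """List groups whose accuracy trails the overall accuracy by at least min_gap."""
    overall, per_group = subgroup_accuracy(records, group_key)
    return sorted(g for g, acc in per_group.items() if overall - acc >= min_gap)

# Hypothetical toy data: 90 "ward" patients (86 correct predictions)
# and 10 "icu" patients (5 correct predictions).
TOY_RECORDS = (
    [{"unit": "ward", "y_true": 1, "y_pred": 1}] * 86
    + [{"unit": "ward", "y_true": 1, "y_pred": 0}] * 4
    + [{"unit": "icu", "y_true": 1, "y_pred": 1}] * 5
    + [{"unit": "icu", "y_true": 1, "y_pred": 0}] * 5
)

if __name__ == "__main__":
    overall, per_group = subgroup_accuracy(TOY_RECORDS, "unit")
    print(f"overall accuracy: {overall:.2f}")
    for group, acc in sorted(per_group.items()):
        print(f"  {group}: {acc:.2f}")
    print("flagged:", flag_underperforming(TOY_RECORDS, "unit"))
```

In this toy data the overall accuracy is 0.91 even though the small `icu` group sits at 0.50, which is the kind of gap an evaluator would want surfaced before deployment. A per-column check like this only scales to groupings that are already labeled, which is the limitation the abstract says AFISP is meant to address.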

Source journal

NPJ Digital Medicine

Multiple-

CiteScore

25.10

Self-citation rate

3.30%

Articles published

170

Review time

15 weeks

About the journal:

npj Digital Medicine is an online open-access journal that focuses on publishing peer-reviewed research in the field of digital medicine. The journal covers various aspects of digital medicine, including the application and implementation of digital and mobile technologies in clinical settings, virtual healthcare, and the use of artificial intelligence and informatics.

The primary goal of the journal is to support innovation and the advancement of healthcare through the integration of new digital and mobile technologies. When determining if a manuscript is suitable for publication, the journal considers four important criteria: novelty, clinical relevance, scientific rigor, and digital innovation.
