Distance guided generative adversarial network for explainable medical image classifications

IF 5.4

CAS Zone 2, Medicine

Q1 ENGINEERING, BIOMEDICAL

Computerized Medical Imaging and Graphics

Pub Date : 2024-10-15

DOI:10.1016/j.compmedimag.2024.102444

Citations: 0

Abstract

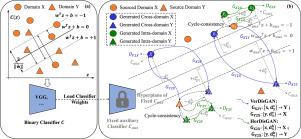

Distance guided generative adversarial network for explainable medical image classifications

Despite the potential benefits of data augmentation for mitigating data insufficiency, traditional augmentation methods primarily rely on prior intra-domain knowledge. On the other hand, advanced generative adversarial networks (GANs) generate inter-domain samples with limited variety. These previous methods make limited contributions to describing the decision boundaries for binary classification. In this paper, we propose a distance-guided GAN (DisGAN) that controls the variation degrees of generated samples in the hyperplane space. Specifically, we instantiate the idea of DisGAN by combining two ways. The first way is vertical distance GAN (VerDisGAN) where the inter-domain generation is conditioned on the vertical distances. The second way is horizontal distance GAN (HorDisGAN) where the intra-domain generation is conditioned on the horizontal distances. Furthermore, VerDisGAN can produce the class-specific regions by mapping the source images to the hyperplane. Experimental results show that DisGAN consistently outperforms the GAN-based augmentation methods with explainable binary classification. The proposed method can apply to different classification architectures and has the potential to extend to multi-class classification. We provide the code in https://github.com/yXiangXiong/DisGAN.
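The abstract does not spell out how the two distances are computed. As a rough sketch only, assume a linear decision hyperplane `w·x + b = 0` in some feature space. The vertical distance is then read as the signed perpendicular distance of a sample to that hyperplane, and the horizontal distance as the distance between two samples after both are projected onto it. These definitions and all function names below are illustrative assumptions, not taken from the paper or the DisGAN repository.

```python
import math


def vertical_distance(x, w, b):
    """Signed perpendicular distance from x to the hyperplane w.x + b = 0.

    Assumed reading of "vertical distance"; the sign tells which side
    of the hyperplane (which class region) the sample lies on.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


def project_onto_hyperplane(x, w, b):
    """Orthogonal projection of x onto the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    d = vertical_distance(x, w, b)
    # Move x back along the unit normal by its signed distance.
    return [xi - d * wi / norm for xi, wi in zip(x, w)]


def horizontal_distance(x1, x2, w, b):
    """Distance between the projections of x1 and x2 onto the hyperplane.

    Assumed reading of "horizontal distance": how far apart two samples
    are along the hyperplane, ignoring how far each is from it.
    """
    p1 = project_onto_hyperplane(x1, w, b)
    p2 = project_onto_hyperplane(x2, w, b)
    return math.dist(p1, p2)


# Toy 2-D hyperplane 3*x0 + 4*x1 - 5 = 0 (hypothetical values).
w, b = [3.0, 4.0], -5.0
sample_a = [3.0, 4.0]   # on the positive side
sample_b = [0.0, 0.0]   # on the negative side
sample_c = [-1.0, 2.0]  # lies on the hyperplane itself

print(vertical_distance(sample_a, w, b))
print(vertical_distance(sample_b, w, b))
print(horizontal_distance(sample_a, sample_c, w, b))
```

Under this reading, a generator conditioned on the vertical distance would control how far a synthetic sample sits from the decision boundary, while one conditioned on the horizontal distance would control how far it moves along the boundary within a class.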

Source journal

CiteScore: 10.70

Self-citation rate: 3.50%

Articles published: 71

Review time: 26 days

About the journal:

The purpose of the journal Computerized Medical Imaging and Graphics is to act as a source for the exchange of research results concerning algorithmic advances, development, and application of digital imaging in disease detection, diagnosis, intervention, prevention, precision medicine, and population health. Included in the journal will be articles on novel computerized imaging or visualization techniques, including artificial intelligence and machine learning, augmented reality for surgical planning and guidance, big biomedical data visualization, computer-aided diagnosis, computerized-robotic surgery, image-guided therapy, imaging scanning and reconstruction, mobile and tele-imaging, radiomics, and imaging integration and modeling with other information relevant to digital health. The types of biomedical imaging include: magnetic resonance, computed tomography, ultrasound, nuclear medicine, X-ray, microwave, optical and multi-photon microscopy, video and sensory imaging, and the convergence of biomedical images with other non-imaging datasets.
