Deep reinforcement learning of airfoil pitch control in a highly disturbed environment using partial observations

IF 2.5

Zone 3: Physics and Astrophysics

Q2 PHYSICS, FLUIDS & PLASMAS

Citations: 0

Abstract

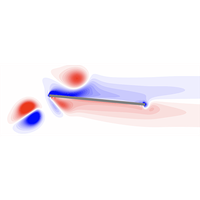

Deep reinforcement learning of airfoil pitch control in a highly disturbed environment using partial observations

This study explores the application of deep reinforcement learning (RL) to design an airfoil pitch controller capable of minimizing lift variations in randomly disturbed flows. The controller, treated as an agent in a partially observable Markov decision process, receives non-Markovian observations from the environment, simulating practical constraints where flow information is limited to force and pressure sensors. Deep RL, particularly the TD3 algorithm, is used to approximate an optimal control policy under such conditions. Testing is conducted for a flat plate airfoil in two environments: a classical unsteady environment with vertical acceleration disturbances (i.e., a Wagner setup) and a viscous flow model with pulsed point force disturbances. In both cases, augmenting observations of the lift, pitch angle, and angular velocity with extra wake information (e.g., from pressure sensors) and retaining memory of past observations enhances RL control performance. Results demonstrate the capability of RL control to match or exceed standard linear controllers in minimizing lift variations. Special attention is given to the choice of training data and the generalization to unseen disturbances.
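
The two observation-side ingredients named in the abstract, extra sensor readings and a memory of past observations, can be pictured as a fixed-length history buffer whose contents are flattened into the vector handed to the policy. The following is a minimal sketch under assumptions that are not taken from the paper: the names `ObservationHistory` and `make_observation`, the zero-padding at episode start, the oldest-first ordering, and the history length and sensor count used in the usage note are all hypothetical.

```python
from collections import deque
from typing import Deque, List, Sequence


class ObservationHistory:
    """Hypothetical helper: keeps the last `length` observations and flattens them."""

    def __init__(self, obs_dim: int, length: int) -> None:
        if obs_dim <= 0 or length <= 0:
            raise ValueError("obs_dim and length must be positive")
        self.obs_dim = obs_dim
        self.length = length
        self._frames: Deque[List[float]] = deque(maxlen=length)
        self.reset()

    def reset(self) -> None:
        """Fill the buffer with zeros (an assumed padding choice) at episode start."""
        self._frames.clear()
        for _ in range(self.length):
            self._frames.append([0.0] * self.obs_dim)

    def push(self, obs: Sequence[float]) -> List[float]:
        """Append the newest observation and return the stacked policy input."""
        if len(obs) != self.obs_dim:
            raise ValueError(f"expected {self.obs_dim} values, got {len(obs)}")
        # deque(maxlen=...) silently drops the oldest frame when full.
        self._frames.append([float(x) for x in obs])
        return self.stacked()

    def stacked(self) -> List[float]:
        """Concatenate the stored frames, oldest first, into one flat vector."""
        return [x for frame in self._frames for x in frame]


def make_observation(
    lift: float,
    pitch_angle: float,
    angular_velocity: float,
    pressures: Sequence[float],
) -> List[float]:
    """Assumed layout: [lift, pitch angle, angular velocity, pressure readings...]."""
    return [lift, pitch_angle, angular_velocity, *pressures]
```

With, say, two pressure sensors and a history of three steps, `ObservationHistory(obs_dim=5, length=3)` would yield a 15-element input on every call to `push`. An actor-critic method such as TD3 would then consume this stacked vector in place of the single-step observation.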

Source journal

Physical Review Fluids

Chemical Engineering-Fluid Flow and Transfer Processes

CiteScore

5.10

Self-citation rate

11.10%

Articles published

488

About the journal:

Physical Review Fluids is APS’s newest online-only journal dedicated to publishing innovative research that will significantly advance the fundamental understanding of fluid dynamics. Physical Review Fluids expands the scope of the APS journals to include additional areas of fluid dynamics research, complements the existing Physical Review collection, and maintains the same quality and reputation that authors and subscribers expect from APS. The journal is published with the endorsement of the APS Division of Fluid Dynamics.
