TrackingMamba: Visual State Space Model for Object Tracking

Impact Factor: 4.7

Zone 2, Earth Science

Q1 ENGINEERING, ELECTRICAL & ELECTRONIC

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing

Pub Date: 2024-09-11

DOI: 10.1109/JSTARS.2024.3458938

Citations: 0

Abstract



In recent years, UAV object tracking has provided technical support across many fields. Most existing work relies on convolutional neural networks (CNNs) or vision transformers. However, CNNs have limited receptive fields, which leads to suboptimal performance, while transformers require substantial computational resources, which makes training and inference costly. Mountainous and jungle environments, which are critical components of the Earth's surface and key scenarios for UAV object tracking, present unique challenges because of steep terrain, dense vegetation, and rapidly changing weather conditions. The lack of relevant datasets further reduces tracking accuracy. This article introduces TrackingMamba, a new tracking framework based on a state-space model, which uses a single-stream tracking architecture with Vision Mamba as its backbone. TrackingMamba matches transformer-based trackers in global feature extraction and long-range dependency modeling, while its computational cost grows only linearly with the input sequence length. Compared with other advanced trackers, TrackingMamba achieves higher accuracy with a simpler model framework, fewer parameters, and fewer FLOPs. Specifically, on the UAV123 benchmark, TrackingMamba outperforms the baseline model OSTrack-256, improving AUC by 2.59% and precision by 4.42%, while reducing parameters by 95.52% and FLOPs by 95.02%. The article also evaluates the performance and shortcomings of TrackingMamba and other advanced trackers in complex and critical jungle environments, and explores potential future research directions in UAV jungle object tracking.
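The efficiency claim above rests on the recurrence at the core of state-space models. The following is a minimal sketch, not TrackingMamba's or Vision Mamba's actual code: it runs a diagonal linear SSM over a token sequence, discretized with zero-order hold, so the work is a single pass with a fixed-size state. It deliberately omits Mamba's input-dependent (selective) parameters, its hardware-aware parallel scan, and its multi-channel projections. The function names (`ssm_scan`, `attention_score_count`, `ssm_update_count`) and the token counts in the usage example are hypothetical, chosen only for illustration.

```python
import math


def ssm_scan(x, a, b, c, delta):
    """Run a diagonal linear state-space model over a 1-D input sequence.

    Continuous-time model, one equation per state dimension n:
        dh_n/dt = a[n] * h_n + b[n] * x,   y = sum_n c[n] * h_n
    Zero-order-hold discretization with step size `delta`:
        a_bar[n] = exp(delta * a[n])
        b_bar[n] = (a_bar[n] - 1) / a[n] * b[n]
    Each step touches only the fixed-size state h, so the total cost is
    O(len(x) * len(a)): linear in sequence length.
    """
    if any(an >= 0 for an in a):
        raise ValueError("diagonal entries of a must be negative for a stable model")
    a_bar = [math.exp(delta * an) for an in a]
    b_bar = [(abn - 1.0) / an * bn for abn, an, bn in zip(a_bar, a, b)]
    h = [0.0] * len(a)  # hidden state; its size does not depend on sequence length
    ys = []
    for xt in x:
        # h_t = a_bar * h_{t-1} + b_bar * x_t  (element-wise, because A is diagonal)
        h = [abn * hn + bbn * xt for abn, hn, bbn in zip(a_bar, h, b_bar)]
        # y_t = C h_t
        ys.append(sum(cn * hn for cn, hn in zip(c, h)))
    return ys


def attention_score_count(num_tokens):
    """Pairwise scores computed by one full self-attention layer: L * L."""
    return num_tokens * num_tokens


def ssm_update_count(num_tokens, state_size):
    """State-element updates performed by one ssm_scan pass: L * N."""
    return num_tokens * state_size


if __name__ == "__main__":
    # Hypothetical single-stream input: template and search-region tokens
    # concatenated into one sequence (token counts chosen for illustration).
    template_tokens, search_tokens = 64, 256
    seq_len = template_tokens + search_tokens
    print(attention_score_count(seq_len))  # 102400
    print(ssm_update_count(seq_len, 16))   # 5120

    # Impulse response of a one-state model: it decays by a_bar = exp(-0.5) per step.
    print(ssm_scan([1.0, 0.0, 0.0], a=[-1.0], b=[1.0], c=[1.0], delta=0.5))
```

Doubling the sequence length doubles the SSM's state updates but quadruples the number of attention scores, which is the kind of gap a "linear growth" claim refers to. These counts are only illustrative; real FLOP figures also depend on channel widths, projections, and the rest of each architecture, so they are not the paper's reported numbers.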


Source journal

CiteScore: 9.30

Self-citation rate: 10.90%

Articles published: 563

Review time: 4.7 months

Journal description:

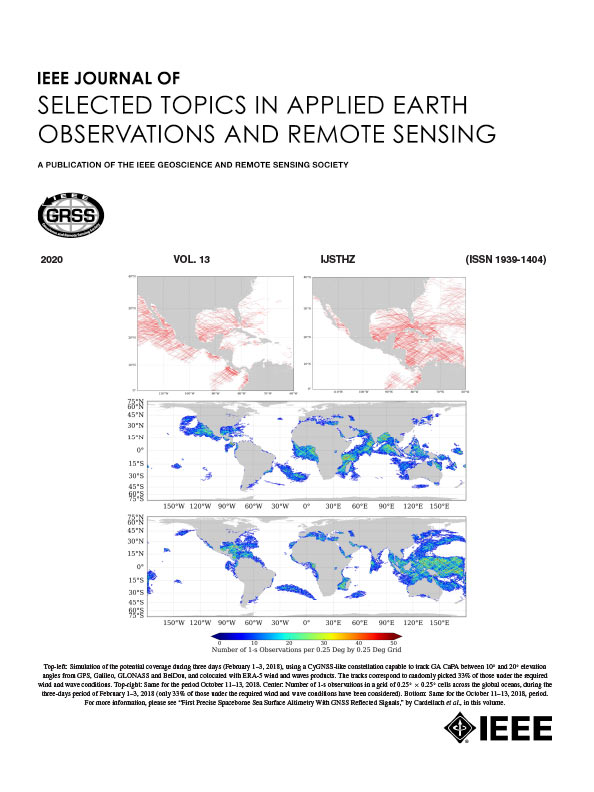

The IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing addresses the growing field of applications in Earth observation and remote sensing, and also provides a venue for the rapidly expanding special issues sponsored by the IEEE Geoscience and Remote Sensing Society. The journal draws upon the experience of the highly successful “IEEE Transactions on Geoscience and Remote Sensing” and provides a complementary medium for the wide range of topics in applied Earth observations. The ‘Applications’ areas encompass the societal benefit areas of the Global Earth Observation System of Systems (GEOSS) program. Through deliberations over two years, ministers from 50 countries agreed to identify nine areas where Earth observation could positively impact the quality of life and health of their respective countries. Some of these areas, including biodiversity, health, and climate, are not traditionally addressed in the IEEE context. Yet it is the skill sets of IEEE members, in areas such as observations, communications, computers, signal processing, standards, and ocean engineering, that form the technical underpinnings of GEOSS. Thus, the journal attracts a broad range of interests, serving present members in new ways and expanding IEEE's visibility into new areas.
