Two-layer networks with the \(\text{ReLU}^k\) activation function: Barron spaces and derivative approximation

IF 2.2

Zone 2, Mathematics

Q1 MATHEMATICS, APPLIED

Citations: 0

Abstract

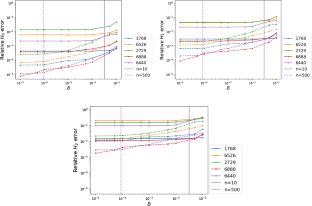


Two-layer networks with the \(\text{ReLU}^k\) activation function: Barron spaces and derivative approximation

We investigate the use of two-layer networks with the rectified power unit, which is called the \(\text {ReLU}^k\) activation function, for function and derivative approximation. By extending and calibrating the corresponding Barron space, we show that two-layer networks with the \(\text {ReLU}^k\) activation function are well-designed to simultaneously approximate an unknown function and its derivatives. When the measurement is noisy, we propose a Tikhonov type regularization method, and provide error bounds when the regularization parameter is chosen appropriately. Several numerical examples support the efficiency of the proposed approach.
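To make the objects in the abstract concrete, here is a minimal sketch of a one-dimensional two-layer network with the \(\text{ReLU}^k\) activation and its exact derivative. It is an illustration only, not the paper's method: the function names, the random weights, the width `n`, and the choice `k = 2` are all assumptions made for this example, and the derivative formula as coded is valid for \(k \ge 2\).

```python
import random


def relu_k(z, k):
    """Rectified power unit: max(0, z) ** k."""
    return max(0.0, z) ** k


def relu_k_prime(z, k):
    """Derivative of relu_k, valid for k >= 2: k * max(0, z) ** (k - 1)."""
    return k * max(0.0, z) ** (k - 1)


def network(x, a, w, b, k):
    """Two-layer network f(x) = sum_i a_i * relu_k(w_i * x + b_i)."""
    return sum(ai * relu_k(wi * x + bi, k) for ai, wi, bi in zip(a, w, b))


def network_derivative(x, a, w, b, k):
    """Exact derivative f'(x) = sum_i a_i * w_i * relu_k'(w_i * x + b_i)."""
    return sum(
        ai * wi * relu_k_prime(wi * x + bi, k) for ai, wi, bi in zip(a, w, b)
    )


# Hypothetical parameters: random weights, width 5, power k = 2.
random.seed(0)
k = 2
n = 5
a = [random.uniform(-1, 1) for _ in range(n)]
w = [random.uniform(-1, 1) for _ in range(n)]
b = [random.uniform(-1, 1) for _ in range(n)]

# Compare the closed-form derivative with a central finite difference.
x0, h = 0.3, 1e-6
fd = (network(x0 + h, a, w, b, k) - network(x0 - h, a, w, b, k)) / (2 * h)
exact = network_derivative(x0, a, w, b, k)
print(f"finite difference: {fd:.8f}, closed form: {exact:.8f}")
```

For \(k \ge 2\) the network is continuously differentiable, so a single trained model yields both an approximation of the function and a closed-form approximation of its derivative. With plain ReLU (\(k = 1\)) the derivative is only piecewise constant.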


Source journal

Numerische Mathematik

Mathematics - Applied Mathematics

CiteScore

4.10

Self-citation rate

4.80%

Articles published

72

Review time

6-12 weeks

Journal description:

Numerische Mathematik publishes papers of the very highest quality presenting significantly new and important developments in all areas of Numerical Analysis. "Numerical Analysis" is here understood in its most general sense, as that part of Mathematics that covers:

1. The conception and mathematical analysis of efficient numerical schemes actually used on computers (the "core" of Numerical Analysis)

2. Optimization and Control Theory

3. Mathematical Modeling

4. The mathematical aspects of Scientific Computing
