Sounding Robots: Design and Evaluation of Auditory Displays for Unintentional Human-Robot Interaction

IF 5.5

Q2 ROBOTICS

Citations: 0

Abstract

Non-verbal communication is important in human-robot interaction (HRI), particularly when humans and robots do not need to engage actively in a task together but instead co-exist in a shared space. Robots might still need to communicate states such as urgency or availability, as well as where they intend to go, to avoid collisions and disruptions. Sounds could be used to communicate such states and intentions in an intuitive and non-disruptive way. Here, we propose a multi-layer classification system for displaying several kinds of robot information simultaneously via sound. We first conceptualise which robot features could be displayed (robot size, speed, availability for interaction, urgency, and directionality); we then map them to a set of audio parameters. The designed sounds were evaluated in five online studies, in which people listened to the sounds and were asked to identify the associated robot features. Participants generally understood the sounds as intended, especially when features were evaluated one at a time, and partially when two features were evaluated simultaneously. These results suggest that sounds can be used successfully to communicate robot states and intended actions implicitly and intuitively.

非语言交流在HRI中很重要,特别是当人类和机器人不需要一起积极参与任务,而是在共享空间中共存时。机器人可能仍然需要沟通紧急或可用性等状态,以及它们打算去哪里,以避免碰撞和中断。声音可以用一种直观和非破坏性的方式来传达这种状态和意图。在这里,我们提出了一个多层分类系统,通过声音同时显示各种机器人信息。我们首先概念化哪些机器人特征可以被显示(机器人的大小,速度,交互的可用性,紧迫性和方向性);然后我们将它们映射到一组音频参数。然后在5项在线研究中对设计的声音进行评估,在这些研究中,人们听了这些声音,并被要求识别相关的机器人特征。这些声音通常被参与者理解为有意的,尤其是当他们一次评估一个特征时,以及同时评估两个特征时。这些评估的结果表明,声音可以成功地用于隐式和直观地传达机器人状态和预期动作。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

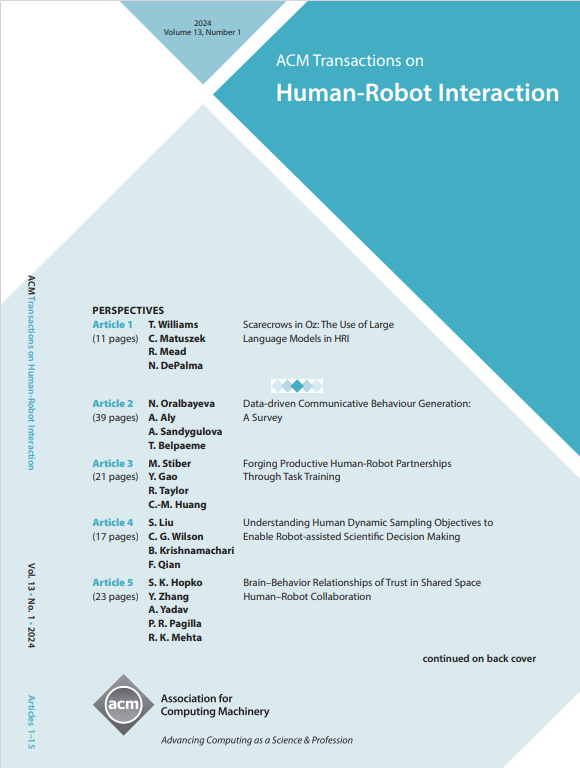

Source journal

ACM Transactions on Human-Robot Interaction

Computer Science-Artificial Intelligence

CiteScore

7.70

Self-citation rate

5.90%

Articles published

65

Journal description:

ACM Transactions on Human-Robot Interaction (THRI) is a prestigious Gold Open Access journal that aspires to lead the field of human-robot interaction as a top-tier, peer-reviewed, interdisciplinary publication. The journal prioritizes articles that significantly contribute to the current state of the art, enhance overall knowledge, have a broad appeal, and are accessible to a diverse audience. Submissions are expected to meet a high scholarly standard, and authors are encouraged to ensure their research is well-presented, advancing the understanding of human-robot interaction, adding cutting-edge or general insights to the field, or challenging current perspectives in this research domain.

THRI warmly invites well-crafted paper submissions from a variety of disciplines, encompassing robotics, computer science, engineering, design, and the behavioral and social sciences. The scholarly articles published in THRI may cover a range of topics such as the nature of human interactions with robots and robotic technologies, methods to enhance or enable novel forms of interaction, and the societal or organizational impacts of these interactions. The editorial team is also keen on receiving proposals for special issues that focus on specific technical challenges or that apply human-robot interaction research to further areas like social computing, consumer behavior, health, and education.