Improving Wikipedia verifiability with AI

IF 18.8

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

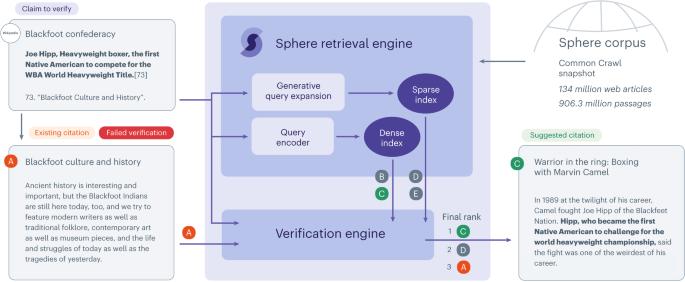

Verifiability is a core content policy of Wikipedia: claims need to be backed by citations. Maintaining and improving the quality of Wikipedia references is an important challenge, and there is a pressing need for better tools to assist humans in this effort. We show that the process of improving references can be tackled with the help of artificial intelligence (AI) powered by an information retrieval system and a language model. This neural-network-based system, which we call SIDE, can identify Wikipedia citations that are unlikely to support their claims, and subsequently recommend better ones from the web. We train this model on existing Wikipedia references, thereby learning from the contributions and combined wisdom of thousands of Wikipedia editors. Using crowdsourcing, we observe that for the top 10% of citations most likely to be tagged as unverifiable by our system, humans prefer our system’s suggested alternatives to the originally cited reference 70% of the time. To validate the applicability of our system, we built a demo to engage with the English-speaking Wikipedia community and found that SIDE’s first citation recommendation is preferred twice as often as the existing Wikipedia citation for the same top 10% of claims most likely to be unverifiable according to SIDE. Our results indicate that an AI-based system could be used, in tandem with humans, to improve the verifiability of Wikipedia.

The immense number of Wikipedia articles makes it challenging for volunteers to ensure that cited sources support the claims they are attached to. Petroni et al. use an information-retrieval model to assist Wikipedia users in improving verifiability.



Source journal

Nature Machine Intelligence


CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
