Explainable neural networks that simulate reasoning

Impact factor: 12

Q1 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 14

Abstract

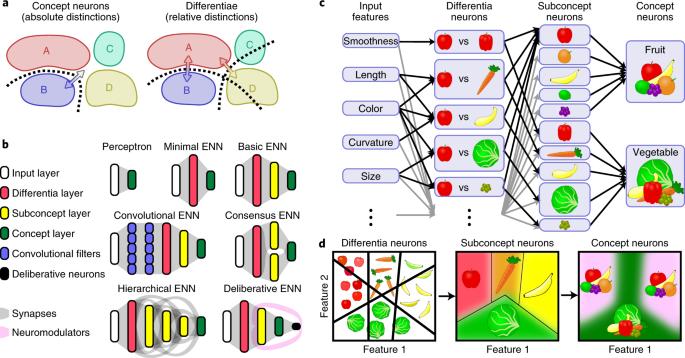

The success of deep neural networks suggests that cognition may emerge from indecipherable patterns of distributed neural activity. Yet these networks are pattern-matching black boxes that cannot simulate higher cognitive functions and lack numerous neurobiological features. Accordingly, they are currently insufficient computational models for understanding neural information processing. Here, we show how neural circuits can directly encode cognitive processes via simple neurobiological principles. To illustrate, we implemented this model in a non-gradient-based machine learning algorithm to train deep neural networks called essence neural networks (ENNs). Neural information processing in ENNs is intrinsically explainable, even on benchmark computer vision tasks. ENNs can also simulate higher cognitive functions such as deliberation, symbolic reasoning and out-of-distribution generalization. ENNs display network properties associated with the brain, such as modularity, distributed and localist firing, and adversarial robustness. ENNs establish a broad computational framework to decipher the neural basis of cognition and pursue artificial general intelligence.

The authors demonstrate how neural systems can encode cognitive functions, and use the proposed model to train robust, scalable deep neural networks that are explainable and capable of symbolic reasoning and domain generalization.
