Algorithms to estimate Shapley value feature attributions

IF 23.9

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 28

Abstract

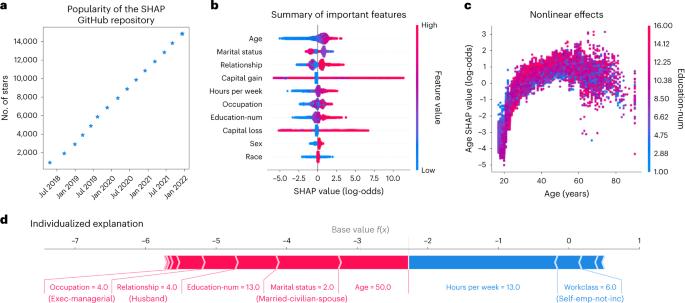

Feature attributions based on the Shapley value are popular for explaining machine learning models. However, their estimation is complex from both theoretical and computational standpoints. We disentangle this complexity into two main factors: the approach to removing feature information and the tractable estimation strategy. These two factors provide a natural lens through which we can better understand and compare 24 distinct algorithms. Based on the various feature-removal approaches, we describe the multiple types of Shapley value feature attributions and the methods to calculate each one. Then, based on the tractable estimation strategies, we characterize two distinct families of approaches: model-agnostic and model-specific approximations. For the model-agnostic approximations, we benchmark a wide class of estimation approaches and tie them to alternative yet equivalent characterizations of the Shapley value. For the model-specific approximations, we clarify the assumptions crucial to each method’s tractability for linear, tree and deep models. Finally, we identify gaps in the literature and promising future research directions.

There are numerous algorithms for generating Shapley value explanations. The authors provide a comprehensive survey of Shapley value feature attribution algorithms by disentangling and clarifying the fundamental challenges underlying their computation.
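To make the two factors named in the abstract concrete, here is a minimal Python sketch, not taken from the paper. It assumes one particular feature-removal approach (replacing absent features with a fixed baseline value) and uses exact enumeration over all feature subsets, which is only feasible for a handful of features and is exactly the cost that tractable estimation strategies try to avoid. The names `shapley_values`, `linear_model`, `x` and `baseline` are hypothetical and chosen for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one input x.

    Assumption: a feature is "removed" by replacing it with its baseline value.
    Cost grows as 2^d in the number of features d, so this is only a toy.
    """
    d = len(x)

    def value(subset):
        # Features in `subset` keep their value from x; the rest take the baseline.
        masked = [x[j] if j in subset else baseline[j] for j in range(d)]
        return model(masked)

    phi = [0.0] * d
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            # Classic Shapley weight |S|! (d - |S| - 1)! / d!
            weight = factorial(size) * factorial(d - size - 1) / factorial(d)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to subset s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def linear_model(z):
    # Hypothetical model used only for this illustration.
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(linear_model, x, baseline)
```

For a linear model under baseline removal, each attribution should come out close to the coefficient times the difference between the input and the baseline, and the attributions should sum to the difference between the model output at `x` and at `baseline`. Other removal approaches (for example, averaging over a marginal or conditional distribution) would replace the `value` function and generally give different attributions.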



Source journal

Nature Machine Intelligence


CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
