Quantum entropy structural encoding for graph neural networks

IF 7.6

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

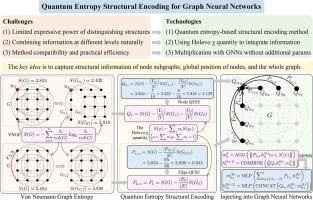

Structural encoding (SE) can improve the expressive power of Graph Neural Networks (GNNs). However, current SE methods remain limited in expressive power because they struggle to capture (1) node subgraphs, (2) the global position of nodes, and (3) the global structure of the graph. To address these limitations, we propose Quantum Entropy Structural Encoding (QESE) for GNNs. For limitations (1) and (3), we apply quantum entropy to node subgraphs and to the whole graph in order to distinguish highly similar structures. For limitation (2), we apply quantum entropy to the complements of node subgraphs in order to locate node positions. We then obtain QESE by combining the quantum entropies of these three parts through the Holevo quantity. Notably, we prove that QESE always captures structural distinctions in node subgraphs and in the whole graph, and that the Holevo quantity enables QESE to represent the global position of nodes. We show theoretically that QESE distinguishes strongly regular graphs that 3-WL fails to distinguish, and that it has the potential to be more powerful than k-WL (k > 3). We adopt a plug-and-play approach to inject QESE into existing GNNs, and further design an approximate version to reduce computational complexity. Experimental results show that QESE lifts the expressive power of GNNs beyond 3-WL and does capture node subgraphs. Furthermore, QESE improves the performance of various GNNs on graph learning tasks and surpasses other SE methods.
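The abstract does not spell out how QESE constructs its quantum states, so the following is only a minimal sketch of the two standard quantities it names: the von Neumann (quantum) entropy of a graph and the Holevo quantity of an ensemble of states. It assumes one common convention, in which a graph's density matrix is its combinatorial Laplacian divided by its trace; this choice, and the helper names `density_matrix`, `von_neumann_entropy`, and `holevo_quantity`, are illustrative assumptions and not taken from the paper.

```python
import numpy as np


def density_matrix(adj):
    """Assumed convention: rho = L / tr(L), with L the combinatorial Laplacian."""
    adj = np.asarray(adj, dtype=float)
    lap = np.diag(adj.sum(axis=1)) - adj
    trace = np.trace(lap)
    if trace == 0:
        raise ValueError("graph has no edges; density matrix is undefined")
    return lap / trace


def von_neumann_entropy(rho):
    """S(rho) = -sum_i lambda_i * log2(lambda_i), treating 0 * log 0 as 0."""
    eigvals = np.linalg.eigvalsh(rho)
    eigvals = eigvals[eigvals > 1e-12]  # drop zero (and numerically zero) eigenvalues
    return float(-(eigvals * np.log2(eigvals)).sum())


def holevo_quantity(probs, rhos):
    """chi = S(sum_i p_i rho_i) - sum_i p_i S(rho_i) for same-sized states rho_i."""
    mixture = sum(p * r for p, r in zip(probs, rhos))
    average = sum(p * von_neumann_entropy(r) for p, r in zip(probs, rhos))
    return von_neumann_entropy(mixture) - average


# Two 3-node graphs: a triangle and a path.
triangle = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]

rho_triangle = density_matrix(triangle)
rho_path = density_matrix(path)

entropy_triangle = von_neumann_entropy(rho_triangle)
entropy_path = von_neumann_entropy(rho_path)
chi = holevo_quantity([0.5, 0.5], [rho_triangle, rho_path])
```

Under this convention the triangle and the path receive different entropies, which illustrates how an entropy value can act as a structural signature. The Holevo quantity is zero when all states in the ensemble are identical and positive otherwise. How QESE maps node subgraphs and their complements to states is described in the paper itself, not here.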


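Source journal

Knowledge-Based Systems

Engineering & Technology, Computer Science: Artificial Intelligence

CiteScore

14.80

Self-citation rate

12.50%

Articles published

1245

Review time

7.8 months

Journal description: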

Knowledge-Based Systems, an international and interdisciplinary journal in artificial intelligence, publishes original, innovative, and creative research results in the field. It focuses on knowledge-based and other artificial intelligence techniques-based systems. The journal aims to support human prediction and decision-making through data science and computation techniques, provide a balanced coverage of theory and practical study, and encourage the development and implementation of knowledge-based intelligence models, methods, systems, and software tools. Applications in business, government, education, engineering, and healthcare are emphasized.
