Modelling neural coding in the auditory midbrain with high resolution and accuracy

IF 23.9

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

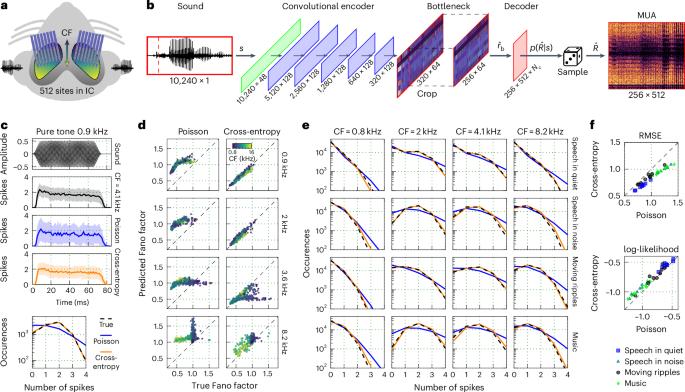

Computational models of auditory processing can be valuable tools for research and technology development. Models of the cochlea are highly accurate and widely used, but models of the auditory brain lag far behind in both performance and penetration. Here we present ICNet, a convolutional encoder–decoder model of neural coding in the inferior colliculus. We developed ICNet using large-scale intracranial recordings from anaesthetized gerbils, addressing three key modelling challenges that are common across all sensory systems: capturing the full statistical structure of neuronal response patterns; accounting for physiological and experimental non-stationarity; and extracting features of sensory processing that are shared across different brains. ICNet provides highly accurate simulation of multi-unit neural responses to a wide range of complex sounds, including near-perfect responses to speech. It also reproduces key neurophysiological phenomena such as forward masking and dynamic range adaptation. ICNet can be used to simulate activity from thousands of neural units or to provide a compact representation of early central auditory processing through its latent dynamics, facilitating a wide range of hearing and audio applications. It can also serve as a foundation core, providing a baseline neural representation for models of active listening or higher-level auditory processing.

Drakopoulos et al. present a model that captures the transformation from sound waves to neural activity patterns underlying early auditory processing. The model reproduces neural responses to a range of complex sounds and key neurophysiological phenomena.



Source journal

Nature Machine Intelligence


CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
