Explainable and generalizable AI for AGC dispatch with heterogeneous generation units: A case study using graph convolutional networks

IF 9.6

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

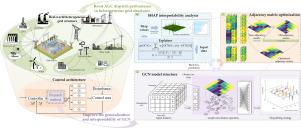

Automatic generation control (AGC) dispatch is essential for maintaining frequency stability and power balance in modern grids with high renewable penetration. Conventional optimization methods incur heavy computational costs, while machine learning methods act as black-box models, which limits interpretability and generalization in safety-critical operations. To address these gaps, we propose an explainable and generalizable framework that integrates graph convolutional networks (GCNs) with Shapley additive explanations (SHAP). SHAP provides quantitative feature attributions that reveal spatiotemporal variability and redundancy, and the derived insights are used to iteratively optimize the GCN adjacency matrix so that it captures inter-generator dependencies more effectively. This closed-loop design enhances both model transparency and robustness. Case studies on a two-area load frequency control (LFC) system and a provincial power grid in China show consistent improvements: in the LFC model, frequency deviation, power deviation, and area control error (ACE) are reduced by 14.30%, 58.95%, and 29.22%, respectively; in the provincial grid, ACE overshoot decreases by 99.52%, frequency deviation decreases by 80.67%, power overshoot is eliminated, and correction distance is reduced by up to 55.24%. These results demonstrate that explainability-driven graph learning can significantly improve the reliability and adaptability of AI-based AGC dispatch in complex, heterogeneous power systems.
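
The closed loop described in the abstract (graph convolution over generation units, Shapley-style attribution, then adjacency refinement) can be illustrated with a minimal NumPy sketch. Everything specific here is an assumption made for illustration: the three-unit toy graph, the feature and weight values, the `dispatch_score` value function, and in particular the `reweight_edges` heuristic, which is a hypothetical stand-in and not the update rule used in the paper. The Shapley values are computed exactly by enumerating coalitions, which is only feasible for a handful of units; the SHAP library uses approximations for larger models.

```python
import itertools
import math

import numpy as np


def normalize_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}, with self-loops added."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(adj, features, weights):
    """One graph convolution: ReLU(A_norm @ X @ W)."""
    return np.maximum(normalize_adjacency(adj) @ features @ weights, 0.0)


def exact_shapley(value_fn, n_players):
    """Exact Shapley values by enumerating every coalition (small n only)."""
    phi = np.zeros(n_players)
    for i in range(n_players):
        others = [p for p in range(n_players) if p != i]
        for size in range(n_players):
            # Weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = (math.factorial(size)
                      * math.factorial(n_players - size - 1)
                      / math.factorial(n_players))
            for coalition in itertools.combinations(others, size):
                members = set(coalition)
                phi[i] += weight * (value_fn(members | {i}) - value_fn(members))
    return phi


def reweight_edges(adj, phi):
    """Hypothetical refinement (not from the paper): scale each edge by the
    mean normalized |attribution| of its two endpoints."""
    total = np.abs(phi).sum()
    if total == 0.0:
        return adj.copy()
    importance = np.abs(phi) / total
    return adj * 0.5 * (importance[:, None] + importance[None, :])


# Toy system: three generation units on a line graph (assumed values).
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
features = np.array([[1.0, 0.5],
                     [0.2, 1.5],
                     [0.8, 0.1]])
weights = np.array([[0.6],
                    [0.4]])


def dispatch_score(coalition):
    """Toy value function: summed GCN output when only the units in
    `coalition` keep their features (the rest are zeroed out)."""
    mask = np.array([[1.0 if g in coalition else 0.0] for g in range(3)])
    return float(gcn_layer(adj, features * mask, weights).sum())


phi = exact_shapley(dispatch_score, 3)
refined_adj = reweight_edges(adj, phi)
print("attributions:", phi)
print("refined adjacency:\n", refined_adj)
```

In this sketch the attributions sum to the difference between the full-coalition score and the empty-coalition score, which is the efficiency property that makes Shapley values additive. A real pipeline would retrain or re-evaluate the GCN on the refined adjacency and repeat the attribution step.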

Source journal

Energy and AI

Engineering-Engineering (miscellaneous)

CiteScore

16.50

Self-citation rate

0.00%

Articles published

64

Review time

56 days
