PIONEER: improving the robustness of student models when compressing pre-trained models of code

Abstract

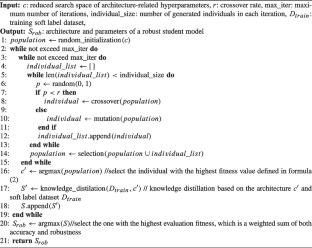

Pre-trained models of code have shown significant effectiveness in a variety of software engineering tasks, but they are difficult for local deployment due to their large size. Existing works mainly focus on compressing these large models into small models to achieve similar performance and efficient inference. However, it is ignored that the small models should be robust enough to deal with adversarial examples that make incorrect predictions to users. Knowledge distillation techniques typically transform the model compression problem into a combinatorial optimization problem of the student architecture space to achieve the best student model performance. But they can only improve the robustness of the student model to a limited extent through traditional adversarial training. This paper proposes PIONEER (ImProvIng the RObustness of StudeNt ModEls WhEn CompRessing Code Models), a novel knowledge distillation technique that enhances the robustness of the student model without requiring adversarial training. PIONEER incorporates robustness evaluation during distillation to guide the optimization of the student model architecture. By using the probability distributions of original examples and adversarial examples as soft labels, the student model learns the features of both the original samples and adversarial examples during training. We conduct experimental evaluations on two downstream tasks (vulnerability prediction and clone detection) for the three models (CodeBERT, GraphCodeBERT, and CodeT5). We utilize PIONEER to compress six downstream task models to small (3 MB) models that are 206\(\times\) smaller than the original size. The results show that compressed models reduce the inference latency (76\(\times\)) and improve the robustness of the model (87.54%) with negligible loss of effectiveness (1.67%).

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: