Your image generator is your new private dataset

IF 4.2

3区 计算机科学

Q2 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

引用次数: 0

Abstract

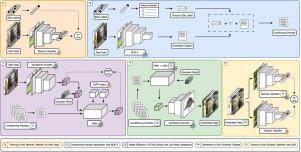

Generative diffusion models have emerged as powerful tools to synthetically produce training data, offering potential solutions to data scarcity and reducing labelling costs for downstream supervised deep learning applications. However, existing approaches for synthetic dataset generation face significant limitations: previous methods like Knowledge Recycling rely on label-conditioned generation with models trained from scratch, limiting flexibility and requiring extensive computational resources, while simple class-based conditioning fails to capture the semantic diversity and intra-class variations found in real datasets. Additionally, effectively leveraging text-conditioned image generation for building classifier training sets requires addressing key issues: constructing informative textual prompts, adapting generative models to specific domains, and ensuring robust performance. This paper proposes the Text-Conditioned Knowledge Recycling (TCKR) pipeline to tackle these challenges. TCKR combines dynamic image captioning, parameter-efficient diffusion model fine-tuning, and Generative Knowledge Distillation techniques to create synthetic datasets tailored for image classification. The pipeline is rigorously evaluated on ten diverse image classification benchmarks. The results demonstrate that models trained solely on TCKR-generated data achieve classification accuracies on par with (and in several cases exceeding) models trained on real images. Furthermore, the evaluation reveals that these synthetic-data-trained models exhibit substantially enhanced privacy characteristics: their vulnerability to Membership Inference Attacks is significantly reduced, with the membership inference AUC lowered by 5.49 points on average compared to using real training data, demonstrating a substantial improvement in the performance-privacy trade-off. These findings indicate that high-fidelity synthetic data can effectively replace real data for training classifiers, yielding strong performance whilst simultaneously providing improved privacy protection as a valuable emergent property. The code and trained models are available in the accompanying open-source repository.

您的图像生成器是您的新私有数据集

生成扩散模型已经成为综合生成训练数据的强大工具,为下游监督深度学习应用提供了数据稀缺和降低标签成本的潜在解决方案。然而,现有的合成数据集生成方法面临着显著的局限性:以前的方法,如知识回收,依赖于从头训练模型的标签条件生成,限制了灵活性,需要大量的计算资源,而简单的基于类的条件反射无法捕获真实数据集中发现的语义多样性和类内变化。此外,有效地利用文本条件图像生成来构建分类器训练集需要解决关键问题:构建信息文本提示,使生成模型适应特定领域,并确保健壮的性能。本文提出了文本条件知识回收(TCKR)管道来解决这些挑战。TCKR结合了动态图像字幕、参数高效扩散模型微调和生成知识蒸馏技术来创建适合图像分类的合成数据集。该管道在十种不同的图像分类基准上进行了严格的评估。结果表明,仅在tckr生成的数据上训练的模型实现的分类精度与在真实图像上训练的模型相当(在某些情况下甚至超过)。此外,评估显示,这些合成数据训练的模型显示出显着增强的隐私特征:它们对成员推理攻击的脆弱性显着降低,与使用真实训练数据相比,成员推理AUC平均降低了5.49点,表明性能-隐私权衡有了显着改善。这些发现表明,高保真合成数据可以有效地代替真实数据用于训练分类器,在产生强大性能的同时,作为一种有价值的紧急属性,提供了更好的隐私保护。代码和经过训练的模型可以在随附的开源存储库中获得。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

来源期刊

Image and Vision Computing

工程技术-工程:电子与电气

CiteScore

8.50

自引率

8.50%

发文量

143

审稿时长

7.8 months

期刊介绍:

Image and Vision Computing has as a primary aim the provision of an effective medium of interchange for the results of high quality theoretical and applied research fundamental to all aspects of image interpretation and computer vision. The journal publishes work that proposes new image interpretation and computer vision methodology or addresses the application of such methods to real world scenes. It seeks to strengthen a deeper understanding in the discipline by encouraging the quantitative comparison and performance evaluation of the proposed methodology. The coverage includes: image interpretation, scene modelling, object recognition and tracking, shape analysis, monitoring and surveillance, active vision and robotic systems, SLAM, biologically-inspired computer vision, motion analysis, stereo vision, document image understanding, character and handwritten text recognition, face and gesture recognition, biometrics, vision-based human-computer interaction, human activity and behavior understanding, data fusion from multiple sensor inputs, image databases.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: