Def-Ag: An energy-efficient decentralized federated learning framework via aggregator clients

IF 6.2

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, THEORY & METHODS

Future Generation Computer Systems-The International Journal of Escience

Pub Date : 2025-09-06

DOI:10.1016/j.future.2025.108114

Citations: 0

Abstract

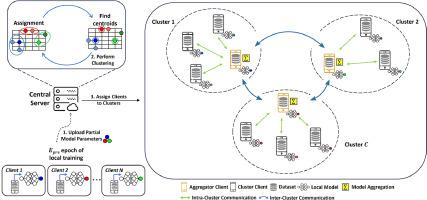

Federated Learning (FL) has revolutionized Artificial Intelligence (AI) by enabling decentralized model training across diverse datasets, thereby addressing privacy concerns. However, traditional FL relies on a centralized server, which leads to latency, single points of failure, and trust issues. Decentralized Federated Learning (DFL) is a promising alternative, but it struggles to achieve optimal accuracy and convergence because client interactions are limited, which in turn leads to energy inefficiency. Moreover, balancing the personalization and generalization of the AI model in DFL remains a complex issue. To address these challenges, this paper presents Def-Ag, an energy-efficient DFL framework that uses aggregator clients within similarity-based clusters. To reduce signaling overhead, only partial model information is exchanged during intra-cluster training. In addition, knowledge distillation is applied in inter-cluster training to transfer knowledge between clusters. Finally, by integrating clustering-based hierarchical DFL and optimizing client selection, Def-Ag reduces energy consumption and communication overhead while balancing personalization and generalization. Extensive experiments on the CIFAR-10 and FMNIST datasets confirm Def-Ag’s superior performance in reducing energy usage while maintaining learning accuracy compared to baseline methods. The results demonstrate that Def-Ag effectively balances personalization and generalization, providing a robust solution for energy-efficient decentralized federated learning systems.
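As a rough illustration of the knowledge distillation idea mentioned in the abstract, the sketch below computes the widely used temperature-softened distillation loss between a "teacher" and a "student" model's logits. The abstract does not state which loss, temperature, or weighting Def-Ag actually uses, so the function names `softmax` and `distillation_loss`, the default temperature of 2.0, and the KL-divergence formulation are all assumptions made for illustration only.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumption: this is the generic distillation loss, not necessarily
    the one used by Def-Ag.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)
    return kl * temperature ** 2


# Hypothetical logits for one sample from two different cluster models.
loss = distillation_loss([3.0, 1.0, 0.0], [0.0, 1.0, 3.0])
```

In an inter-cluster setting, the teacher logits might plausibly come from another cluster's model evaluated on the same input, so that only predictions rather than full model weights need to be compared; this mapping onto Def-Ag is a guess, not something the abstract confirms.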


Source journal

CiteScore: 19.90

Self-citation rate: 2.70%

Articles published: 376

Review time: 10.6 months

About the journal:

Computing infrastructures and systems are constantly evolving, resulting in increasingly complex and collaborative scientific applications. To cope with these advancements, there is a growing need for collaborative tools that can effectively map, control, and execute these applications.

Furthermore, with the explosion of Big Data, there is a requirement for innovative methods and infrastructures to collect, analyze, and derive meaningful insights from the vast amount of data generated. This necessitates the integration of computational and storage capabilities, databases, sensors, and human collaboration.

Future Generation Computer Systems aims to pioneer advancements in distributed systems, collaborative environments, high-performance computing, and Big Data analytics. It strives to stay at the forefront of developments in grids, clouds, and the Internet of Things (IoT) to effectively address the challenges posed by these wide-area, fully distributed sensing and computing systems.
